Hello Juan

It’s good that you came to this realization. Sometimes we ask questions that we later consider “stupid,” but they’re not, because they help us reach exactly these kinds of realizations!

Now, concerning your next question: this is an excellent issue to consider. It shows critical thinking, and you’re on the right track.

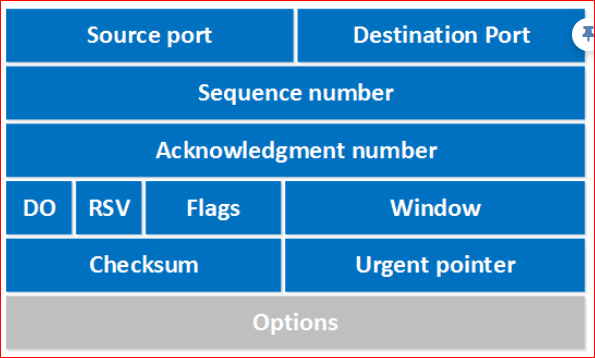

As described in a previous post, the MSS is a TCP-level setting that determines the maximum amount of data (the payload, excluding the TCP and IP headers) that can be contained in each TCP segment. The MTU, on the other hand, is a network interface setting that determines the maximum size of an IP packet, headers included, that can be sent over a network link.
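The relationship between the two values can be sketched in a few lines of Python. This is a simplified model that assumes IPv4 and TCP headers with no options (20 bytes each); options such as TCP timestamps would reduce the usable payload a bit further.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
TCP_HEADER = 20   # bytes, assuming no TCP options

def mss_from_mtu(mtu: int) -> int:
    """Largest TCP payload that fits in one IP packet of size `mtu`."""
    return mtu - IPV4_HEADER - TCP_HEADER

for mtu in (1500, 9000):
    print(f"MTU {mtu} -> MSS {mss_from_mtu(mtu)}")
```

This prints an MSS of 1460 for a 1500-byte MTU and 8960 for a 9000-byte MTU.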

The purpose of larger MTUs, such as 9000 bytes (commonly called Jumbo Frames), is to increase the efficiency of data transmission for certain types of network traffic. Larger frames mean fewer packets for a given amount of data, which reduces the overhead associated with each packet (such as headers, acknowledgments, and per-packet processing) and can improve network performance.
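To put rough numbers on “fewer packets,” here is a small sketch that counts how many packets, and how many header bytes, it takes to move 1 MB of application data with a standard MSS versus a Jumbo Frame MSS. It again assumes 40 bytes of IPv4 + TCP headers per packet with no options, and it ignores Ethernet framing and ACK traffic; the 1 MB figure is purely illustrative.

```python
import math

HEADERS_PER_PACKET = 40  # IPv4 + TCP headers, assuming no options

def packets_and_overhead(data_bytes: int, mss: int) -> tuple[int, int]:
    """Return (packet count, total header bytes) needed to send data_bytes."""
    packets = math.ceil(data_bytes / mss)  # the last packet may be partially filled
    return packets, packets * HEADERS_PER_PACKET

data = 1_000_000  # 1 MB of application data (illustrative)
for mss in (1460, 8960):
    packets, overhead = packets_and_overhead(data, mss)
    print(f"MSS {mss}: {packets} packets, {overhead} header bytes")
```

With an MSS of 1460 this works out to 685 packets and 27,400 header bytes, while an MSS of 8960 needs only 112 packets and 4,480 header bytes.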

Now, you’re correct that if the MSS is 1460 bytes, a 9000-byte MTU won’t give you this efficiency. However, you wouldn’t actually leave “empty room”: the packets being sent would simply be limited to 1500 bytes. They wouldn’t be 9000 bytes long with 7500 bytes of empty space, so it isn’t detrimental to efficiency; you just don’t gain anything. Moreover, TCP can automatically set the MSS to take advantage of larger MTU sizes.
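The “no empty room” point can be illustrated with a minimal sketch that splits some data into MSS-sized segments and reports the resulting IP packet sizes. The function name and the 4000-byte amount are hypothetical, and it assumes 40 bytes of IPv4 + TCP headers with no options. Each packet is only as large as its payload plus headers; nothing pads it up to the link MTU.

```python
IP_TCP_HEADERS = 40  # IPv4 + TCP headers, assuming no options

def packet_sizes(data_bytes: int, mss: int, mtu: int) -> list[int]:
    """IP packet sizes produced when data_bytes is split into MSS-sized segments."""
    sizes = []
    remaining = data_bytes
    while remaining > 0:
        payload = min(remaining, mss)
        size = payload + IP_TCP_HEADERS  # no padding up to the MTU
        if size > mtu:
            raise ValueError("segment would not fit the link MTU")
        sizes.append(size)
        remaining -= payload
    return sizes

print(packet_sizes(4000, mss=1460, mtu=9000))
```

On a 9000-byte MTU link with an MSS of 1460, this prints `[1500, 1500, 1120]`: the packets stay at 1500 bytes or less, and the extra MTU headroom simply goes unused.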

The MSS is exchanged during the TCP three-way handshake: each host advertises in its SYN (or SYN-ACK) an MSS derived from the MTU of its own interface, and each side then sends segments no larger than the smaller of the two advertised values. So if both hosts have Jumbo Frame-enabled interfaces, they advertise a large MSS that can take advantage of the capabilities of the underlying infrastructure.
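Here is a simplified model of the MSS exchange, assuming IPv4 and TCP headers without options. It considers only the two endpoints’ interface MTUs and ignores any intermediate links; the function names are hypothetical.

```python
HEADERS = 40  # IPv4 + TCP headers, assuming no options

def advertised_mss(interface_mtu: int) -> int:
    # Each host derives the MSS it announces in its SYN from its own interface MTU
    return interface_mtu - HEADERS

def effective_mss(local_mtu: int, peer_mtu: int) -> int:
    # A sender stays within both its own MSS and the MSS the peer advertised
    return min(advertised_mss(local_mtu), advertised_mss(peer_mtu))

print(effective_mss(9000, 9000))  # both hosts on Jumbo Frame interfaces
print(effective_mss(9000, 1500))  # one host still on a 1500-byte MTU
```

The first call yields 8960 and the second yields 1460: a single endpoint with a standard MTU is enough to keep the connection at the smaller segment size.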

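However, for this to be successful, the full path from end to end must support large MTU sizes. If even one router in the path has a 1500-byte MTU, the segment size must be reduced to fit it. This is typically handled by Path MTU Discovery, where that router drops the oversized packet (sent with the Don’t Fragment bit set) and replies with an ICMP “Fragmentation Needed” message so the sender can lower its segment size, or by MSS clamping configured on a router. A switch cannot send such a message; it simply drops oversized frames, so a single switch left at 1500 bytes can break large transfers.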
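The end result can be sketched by modeling a path as a hypothetical list of link MTUs. This computes the value that Path MTU Discovery ultimately converges to; it does not simulate the ICMP exchange itself, and it again assumes 40 bytes of IPv4 + TCP headers.

```python
HEADERS = 40  # IPv4 + TCP headers, assuming no options

def path_mtu(link_mtus: list[int]) -> int:
    # A packet must cross every link, so the smallest link MTU is the limit
    return min(link_mtus)

def usable_mss(link_mtus: list[int]) -> int:
    return path_mtu(link_mtus) - HEADERS

all_jumbo = [9000, 9000, 9000, 9000]          # hypothetical path
one_legacy_hop = [9000, 9000, 1500, 9000]     # one hop left at 1500
print(usable_mss(all_jumbo))
print(usable_mss(one_legacy_hop))
```

The all-jumbo path allows an MSS of 8960, while a single 1500-byte hop brings it down to 1460 for the whole connection.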

Also, it’s important to note that not all network traffic consists of TCP segments that are subject to the MSS. For example, UDP traffic and ICMP messages are not subject to the MSS, so they can take full advantage of a larger MTU without any adjustments.
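For UDP and ICMP, the largest payload that fits into a single packet without fragmentation depends only on the MTU and their own (8-byte) headers. This sketch assumes IPv4 without options and an ICMP Echo message; the function names are hypothetical.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
UDP_HEADER = 8
ICMP_ECHO_HEADER = 8

def max_udp_payload(mtu: int) -> int:
    return mtu - IPV4_HEADER - UDP_HEADER

def max_icmp_echo_payload(mtu: int) -> int:
    return mtu - IPV4_HEADER - ICMP_ECHO_HEADER

for mtu in (1500, 9000):
    print(mtu, max_udp_payload(mtu), max_icmp_echo_payload(mtu))
```

This gives 1472 bytes for a 1500-byte MTU and 8972 bytes for a 9000-byte MTU. That is also why Jumbo Frame paths are often tested with a ping of 8972 data bytes and fragmentation disallowed (for example, `ping -M do -s 8972 <host>` on Linux).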

But remember, larger MTU sizes aren’t always better. They can cause issues with network devices that don’t support them, and they can increase the impact of packet loss, since each lost packet carries more data that must be retransmitted. So it’s important to consider the characteristics of your specific network before deciding to use Jumbo Frames or increase the MSS. When and where to use them is typically decided as part of a broader network design process that chooses the most appropriate configuration.

I hope this has been helpful!

Laz
