Thank you very much Lazaros!

Hi!

Rene, in your first diagram the routing table is in the Control Plane, but later you mention that the TCAM stores the routing table, and the TCAM is in the Data Plane hardware (ASIC). I am confused about that…

Hello Artem

The first diagram where the routing table is in the Control Plane shows the functionality of a traditional device. This is how the tasks were divided in the past.

In the second instance, the lesson is describing high-speed layer three switches, which have moved the routing table into the TCAM for higher speeds. This is how high-speed routing can be achieved in layer three switches.

I hope this has been helpful!

Laz

Please correct me if my understanding of Control Plane and Data Plane is wrong.

Control Plane: This is where Layer-3 forwarding decisions are looked up. Routing table and ARP table are present in the control plane. Only Routers and Layer-3 switches have the control plane present.

Data Plane: It is where Layer-2 forwarding decisions are made. This is hardware based (ASICs). A Layer-2 switch simply has a data plane. But a Layer-3 switch or router peels off the MAC header and checks the IP address. This IP address lookup is again done in the control plane.

However, modern L-3 switches are able to make all the decisions from the data plane itself due to the FIB and TCAM.

Hello Rosna

You’re almost there! Think about it this way. The control plane deals with HOW to operate. This includes routing protocols, their operation and the exchange of their packets, ARP, HSRP, CDP, forwarding mechanisms, CEF and the like. Control plane packets are originated by and destined to intermediary devices such as routers. They never reach the end user.

Data plane has to do with exactly that: DATA! It is the part of the network that actually moves the user data from source to destination. A simple way to think about it is that this is anything that goes through the router and not to the router.

This is a nice thread from the Cisco Learning Network that uses an analogy to explain it more clearly.

I hope this has been helpful!

Laz

For example an IP packet with destination address 192.168.20.44 will match:

- 168.20.44 /32

- 168.20.0 /24

- 168.0.0 /16

Is this just a typo and 192.168.20.44/32, 192.168.20.0/24, 192.168.0.0/16 is meant? Or is there some significance to omitting the first octet?

Hello Arun

Yes, that is a typo. Thanks for catching that. I’ll let Rene know.
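For anyone following along, here is a minimal Python sketch of the matching described in the lesson, using the corrected prefixes. It relies only on the standard `ipaddress` module. The function name `matching_prefixes` and the extra `10.0.0.0/8` entry are made up for illustration and are not from the lesson.

```python
import ipaddress

def matching_prefixes(destination, prefixes):
    """Return every prefix that contains the destination, most specific first."""
    addr = ipaddress.ip_address(destination)
    networks = [ipaddress.ip_network(p) for p in prefixes]
    matches = [n for n in networks if addr in n]
    # Longest prefix length first, so matches[0] is the longest match.
    return sorted(matches, key=lambda n: n.prefixlen, reverse=True)

# The three corrected prefixes from the lesson, plus one hypothetical
# non-matching entry (10.0.0.0/8) added only for contrast.
prefixes = ["192.168.0.0/16", "192.168.20.0/24", "192.168.20.44/32", "10.0.0.0/8"]
matches = matching_prefixes("192.168.20.44", prefixes)
best = matches[0]

for network in matches:
    print(network)
print("longest match:", best)
```

All three 192.168.x prefixes match, and the /32 is the one that would be used because it is the most specific.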

Thanks again!

Laz

In order to see some of the output from the command show adjacency detail, would CDP have to be enabled? It seems like that would be the case, but maybe there’s something else going on behind the scenes with CEF.

Hello Andy

CDP is not a prerequisite for the show adjacency detail command. You can find out more about this command at the following command reference document:

I hope this has been helpful!

Laz

Thanks Lazaros. I’ll take a look at that link.

Hi Rene and staff,

I read the forum for this lesson and I want to come back to the question “with fast switching, how does IOS determine that a packet is in a known flow?”

What determines a flow? If you need to look at the IP source and destination in the L3 header to determine the flow, you need the CPU, I think (though less than when every packet is process switched).

But I can't imagine that Cisco did not find a better way (with a hash or something like that).

Does only Cisco know how IOS determines an IP flow, or has Cisco shared this information?

Regards

Hello Dominique

As you correctly stated, the flow is identified using the appropriate IP source and destination information in the L3 header. Fast switching still uses the CPU; however, it uses it much more efficiently than process switching does.

As mentioned in the lesson, after a packet has been identified as being the first in a specific flow, information about how to reach the destination is stored in a fast-switching cache. This eliminates the need for the CPU to look up the route again in the routing table (which may have hundreds of entries). It just has to look it up in the cache, which is much smaller. The next hop information can be reused again and again from this cache.
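To make the idea concrete, here is a rough Python sketch of the behaviour described above. It is not how IOS implements fast switching internally; the class name `FastSwitchSketch`, the cache key (source and destination address) and the example routes are all assumptions chosen for illustration.

```python
import ipaddress

class FastSwitchSketch:
    """Toy model: the first packet of a flow does a full lookup, later ones hit a cache."""

    def __init__(self, routes):
        # routes maps prefix strings to next hop strings (hypothetical values).
        self.routes = {ipaddress.ip_network(p): nh for p, nh in routes.items()}
        self.cache = {}        # (src, dst) -> next hop
        self.slow_lookups = 0  # how many times the full routing table was searched

    def _process_switch(self, dst):
        # Full longest-prefix-match search over the whole routing table.
        self.slow_lookups += 1
        addr = ipaddress.ip_address(dst)
        candidates = [n for n in self.routes if addr in n]
        if not candidates:
            return None
        return self.routes[max(candidates, key=lambda n: n.prefixlen)]

    def forward(self, src, dst):
        flow = (src, dst)
        if flow in self.cache:
            return self.cache[flow]      # known flow: reuse the cached next hop
        next_hop = self._process_switch(dst)
        if next_hop is not None:
            self.cache[flow] = next_hop  # first packet populates the cache
        return next_hop

router = FastSwitchSketch({"192.168.20.0/24": "10.1.1.2", "0.0.0.0/0": "10.1.1.1"})
for _ in range(3):
    hop = router.forward("10.0.0.5", "192.168.20.44")
print(hop, router.slow_lookups)
```

Three packets of the same flow are forwarded, but the routing table is only searched once.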

Fast switching and CEF are Cisco proprietary procedures, however, other vendors have their own versions of such high performance switching techniques. I’m not sure if there is a better way or if Cisco has shared their algorithms with others.

I hope this has been helpful!

Laz

Hello @lagapidis,

So how does the routing table communicate with the fast-switching cache? Let’s say the next hop has been updated, for example.

Hello sales2161

If a routing table entry changes, and a packet comes in that is affected by this change, the packet goes through the normal process switching procedure. This is because the fast switching cache doesn’t yet have an entry that matches this new routing entry. As per normal operation, the first routing of such a packet will place a new fast switching entry into the cache, which will be used for all subsequent packets that match this routing entry.

Now any unused fast switching cache entries eventually become invalid. By default, if a cache entry is not used for two minutes, it becomes invalid. If an invalid entry is not used for another minute, it is purged from the cache. If during that one minute the entry is used, it will become active again.

The period of time that valid fast switch cache entries must be inactive before the router invalidates them can be configured. You can also specify after how long an invalid entry remains before being purged. Finally, you can also specify the number of cache entries that the router can invalidate per minute.
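The aging behaviour can be sketched in a few lines of Python. This is only a model of the timers described above (two minutes until an unused entry becomes invalid, one more minute until it is purged), not the actual IOS logic. The class `AgingCache`, its method names and the way time is passed in as plain seconds are assumptions for illustration.

```python
class AgingCache:
    """Toy model of fast-switching cache aging with configurable timers."""

    def __init__(self, inactive_after=120, purge_after=60):
        self.inactive_after = inactive_after  # seconds unused before invalidation
        self.purge_after = purge_after        # seconds invalid before purging
        self.entries = {}

    def add(self, key, next_hop, now):
        self.entries[key] = {"next_hop": next_hop, "last_used": now, "invalid_since": None}

    def _age(self, now):
        for key in list(self.entries):
            entry = self.entries[key]
            if entry["invalid_since"] is None and now - entry["last_used"] >= self.inactive_after:
                entry["invalid_since"] = entry["last_used"] + self.inactive_after
            if entry["invalid_since"] is not None and now - entry["invalid_since"] >= self.purge_after:
                del self.entries[key]  # invalid for too long: purge

    def use(self, key, now):
        """Use an entry; an invalid entry that is used becomes active again."""
        self._age(now)
        entry = self.entries.get(key)
        if entry is None:
            return None
        entry["last_used"] = now
        entry["invalid_since"] = None
        return entry["next_hop"]

    def state(self, key, now):
        self._age(now)
        entry = self.entries.get(key)
        if entry is None:
            return "purged"
        return "valid" if entry["invalid_since"] is None else "invalid"

cache = AgingCache()
cache.add("192.168.20.44", "10.1.1.2", now=0)
timeline = [cache.state("192.168.20.44", now=60),    # still valid
            cache.state("192.168.20.44", now=130)]   # unused for 2 minutes
cache.use("192.168.20.44", now=150)                  # used while invalid
timeline.append(cache.state("192.168.20.44", now=150))
timeline.append(cache.state("192.168.20.44", now=280))
timeline.append(cache.state("192.168.20.44", now=340))
print(timeline)
```

The two constructor arguments stand in for the configurable timers mentioned above; the limit on how many entries can be invalidated per minute is left out to keep the sketch short.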

You can find more info about this at the following Cisco documentation:

I hope this has been helpful!

Laz

Hi Rene,

What is the equivalent of CEF for other vendors? What do they call it?

Hello Rahul

Juniper’s equivalent is called JTREE while Huawei calls it Fast Forwarding. You can further research it for other vendors as well.

I hope this has been helpful!

Laz

Hi Guys - I understand that the FIB is in the data plane. Is the adjacency table also located in the data plane? Thanks - Gareth.

Hello Gareth

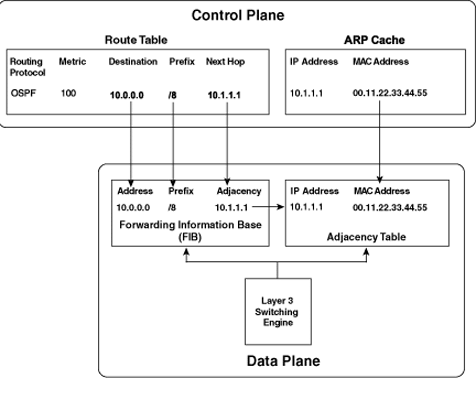

The adjacency table, just like the FIB is in the data plane. It is part of the forwarding engine of a router, rather than part of the Routing engine. This diagram should make the architecture clearer:
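As a rough illustration of how the two tables work together when forwarding a packet, here is a small Python sketch. The FIB answers "which next hop and interface?" and the adjacency table answers "which layer 2 address do I rewrite the frame with?". All prefixes, next hops, interface names and MAC addresses below are hypothetical, and real hardware uses specialised lookup structures rather than Python dictionaries.

```python
import ipaddress

# Hypothetical FIB: prefix -> (next hop, outgoing interface)
fib = {
    ipaddress.ip_network("192.168.20.0/24"): ("10.1.1.2", "Gi0/1"),
    ipaddress.ip_network("0.0.0.0/0"): ("10.1.1.1", "Gi0/0"),
}

# Hypothetical adjacency table: (next hop, interface) -> layer 2 rewrite (MAC)
adjacency = {
    ("10.1.1.2", "Gi0/1"): "aa:bb:cc:00:00:02",
    ("10.1.1.1", "Gi0/0"): "aa:bb:cc:00:00:01",
}

def forward(destination):
    """Longest-prefix match in the FIB, then layer 2 lookup in the adjacency table."""
    addr = ipaddress.ip_address(destination)
    # The default route guarantees at least one match for any IPv4 address here.
    best = max((n for n in fib if addr in n), key=lambda n: n.prefixlen)
    next_hop, interface = fib[best]
    mac = adjacency[(next_hop, interface)]
    return interface, next_hop, mac

print(forward("192.168.20.44"))
print(forward("8.8.8.8"))
```

Neither lookup touches the routing protocols or the routing table itself, which is why both tables can live in the forwarding engine.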

I hope this has been helpful!

Laz

Hi Rene and Laz,

So with Cisco devices, CEF is enabled by default. Do we need to do anything there?

If we disable CEF, will the router or switch use process switching or fast switching, and would we see any delay on the network or anything else?

Another question: I am using an ISR 4431 router. When configuring ip cef there are some options. How do I know which ones to use, and what is the best practice?

R1(config)#ip cef ?

accounting Enable CEF accounting

distributed Distributed Cisco Express Forwarding

load-sharing Load sharing

optimize Optimizations

traffic-statistics Enable collection of traffic statistics

R1#do show ver

Cisco IOS XE Software, Version 16.06.04

Cisco IOS Software [Everest], ISR Software (X86_64_LINUX_IOSD-UNIVERSALK9-M), Version 16.6.4, RELEASE SOFTWARE (fc3)

Technical Support: http://www.cisco.com/techsupport

Copyright (c) 1986-2018 by Cisco Systems, Inc.

Compiled Sun 08-Jul-18 04:33 by mcpre

Hi Rene,

My router has just been configured; no data passes through it and no devices are connected to it. I entered the command "show cef memory".

I see that almost all the values are very high. Is this normal? I remember that RAM or CPU utilization below about 80% is considered good. How does that apply here?

R1#show cef memory

Memory in use/allocated Count

ADJ: GSB : 468/560 ( 83%) [1]

ADJ: NULL adjacency : 436/528 ( 82%) [1]

ADJ: adj sev context : 264/448 ( 58%) [2]

ADJ: adj_nh : 400/584 ( 68%) [2]

ADJ: adjacency : 3408/3776 ( 90%) [4]

ADJ: mcprp_adj_inject_sb : 18180/18272 ( 99%) [1]

ADJ: request resolve : 4632/4816 ( 96%) [2]

ADJ: sevs : 440/624 ( 70%) [2]

CEF: Brkr Updat : 12072/12808 ( 94%) [8]

CEF: Brkr Update Rec : 320/504 ( 63%) [2]

CEF: Brkr zone : 908/2472 ( 36%) [17]

CEF: Broker : 4964/6528 ( 76%) [17]

CEF: Brokers Array : 188/280 ( 67%) [1]

CEF: EVENT msg chunks : 332/424 ( 78%) [1]

CEF: FIB LC array : 364/456 ( 79%) [1]

CEF: FIB subtree context : 156/432 ( 36%) [3]

CEF: FIBHWIDB : 3240/4160 ( 77%) [10]

CEF: FIBIDB : 5400/6320 ( 85%) [10]

CEF: IPv4 ARP throttle : 2052/2144 ( 95%) [1]

CEF: IPv4 process : 1164/1808 ( 64%) [7]

CEF: OCE get hash callbac : 52/144 ( 36%) [1]

CEF: Protocol Discard sub : 904/1640 ( 55%) [8]

CEF: TABLE msg chunks : 892/984 ( 90%) [1]

CEF: Table rate Monitor S : 240/608 ( 39%) [4]

CEF: arp throttle chunk : 32168/32352 ( 99%) [2]

CEF: cover need : 1840/2208 ( 83%) [4]

CEF: cover need deagg chu : 376/560 ( 67%) [2]

CEF: dQ elems : 384/568 ( 67%) [2]

CEF: dQ walks : 536/720 ( 74%) [2]

CEF: fib : 25576/26128 ( 97%) [6]

CEF: fib GSB : 23340/23800 ( 98%) [5]

CEF: fib deps : 392/576 ( 68%) [2]

CEF: fib loop sb : 368/552 ( 66%) [2]

CEF: fib_fib_co : 672/856 ( 78%) [2]

CEF: fib_fib_covered chun : 392/576 ( 68%) [2]

CEF: fib_fib_rp_bfd_sb : 2728/2912 ( 93%) [2]

CEF: fib_fib_sr : 4584/5504 ( 83%) [10]

CEF: fib_fib_src_adj_sb : 392/576 ( 68%) [2]

CEF: fib_fib_src_adj_sb_a : 360/544 ( 66%) [2]

CEF: fib_fib_src_interfac : 368/552 ( 66%) [2]

CEF: fib_fib_src_rr_sb : 400/584 ( 68%) [2]

CEF: fib_fib_src_special_ : 376/560 ( 67%) [2]

CEF: fib_head_s : 664/848 ( 78%) [2]

CEF: fib_head_sb chunk : 384/568 ( 67%) [2]

CEF: fib_member : 2584/2952 ( 87%) [4]

CEF: fib_member_sb chunk : 368/552 ( 66%) [2]

CEF: fib_rib_route_update : 5184/5368 ( 96%) [2]

CEF: fib_table_fibswsb_de : 488/672 ( 72%) [2]

CEF: fibhwidb table : 131076/131168 ( 99%) [1]

CEF: fibhwsb ctl : 368/552 ( 66%) [2]

CEF: fibidb table : 131076/131168 ( 99%) [1]

CEF: fibswsb ct : 11632/12000 ( 96%) [4]

CEF: fibswsb ctl : 1680/1864 ( 90%) [2]

CEF: hash table : 262152/262336 ( 99%) [2]

CEF: ipv6 feature error c : 9188/9280 ( 99%) [1]

CEF: ipv6 feature error s : 9188/9280 ( 99%) [1]

CEF: ipv6 not cef switche : 1276/1368 ( 93%) [1]

CEF: ipv6 not cef switche : 1276/1368 ( 93%) [1]

CEF: loadinf16 : 928/1112 ( 83%) [2]

Memory in use/allocated Count

CEF: loadinfo2 : 440/624 ( 70%) [2]

CEF: ml nh tracer : 368/552 ( 66%) [2]

CEF: mpls long path exts : 648/832 ( 77%) [2]

CEF: mpls path exts : 440/624 ( 70%) [2]

CEF: nh entry context : 440/624 ( 70%) [2]

CEF: nh entry params : 384/568 ( 67%) [2]

CEF: non_ip entry context : 440/624 ( 70%) [2]

CEF: pathl : 3816/4368 ( 87%) [6]

CEF: pathl ifs : 2208/2760 ( 80%) [6]

CEF: pathl its : 648/832 ( 77%) [2]

CEF: pathloutputchain : 792/976 ( 81%) [2]

CEF: paths : 4672/5224 ( 89%) [6]

CEF: plist dq it : 512/696 ( 73%) [2]

CEF: prefix query msg chu : 2724/2816 ( 96%) [1]

CEF: subtree context : 1160/1712 ( 67%) [6]

CEF: table : 1736/2104 ( 82%) [4]

CEF: table GSB : 800/984 ( 81%) [2]

CEF: table walks : 440/624 ( 70%) [2]

CEF: terminal fibs list : 128/312 ( 41%) [2]

CEF: test fib entry sbs : 376/560 ( 67%) [2]

CEF: up event c : 408/592 ( 68%) [2]

CEF: up event chunk : 232/416 ( 55%) [2]

CEF: v6 nd discard thrott : 4100/4192 ( 97%) [1]

CEF: v6 nd throttle chunk : 19368/19552 ( 99%) [2]

CEF: vrf : 2904/3088 ( 94%) [2]

COLL: coll rec : 368/552 ( 66%) [2]

TAL: MTRIE n08 : 131904/132272 ( 99%) [4]

TAL: control block : 400/768 ( 52%) [4]

TAL: item list elem : 232/416 ( 55%) [2]

TAL: mtrie control block : 5340/5616 ( 95%) [3]

TAL: rtree aux : 300/576 ( 52%) [3]

TAL: rtree control block : 180/456 ( 39%) [3]

TAL: rtree nodes : 848/1032 ( 82%) [2]

TAL: tree control : 448/1000 ( 44%) [6]

Totals : 916444/942848 ( 97%) [287]
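One way to read the percentage column: judging from the numbers themselves, it looks like the in-use value divided by the allocated value for each individual CEF memory pool, rounded down. A small Python sketch that checks this against a few rows copied from the output above follows. The regular expression and the function name `parse_row` are my own and are only an assumption about the layout of these lines.

```python
import re

# Matches rows such as "ADJ: GSB : 468/560 ( 83%) [1]"
ROW = re.compile(
    r"^(?P<name>.+?)\s*:\s*(?P<used>\d+)/(?P<alloc>\d+)"
    r"\s*\(\s*(?P<pct>\d+)%\)\s*\[(?P<count>\d+)\]$"
)

def parse_row(line):
    """Return (name, in_use, allocated, percent, count), or None for other lines."""
    m = ROW.match(line.strip())
    if m is None:
        return None
    return (m["name"], int(m["used"]), int(m["alloc"]), int(m["pct"]), int(m["count"]))

sample = [
    "Memory in use/allocated Count",
    "ADJ: GSB : 468/560 ( 83%) [1]",
    "CEF: Brkr zone : 908/2472 ( 36%) [17]",
    "Totals : 916444/942848 ( 97%) [287]",
]

rows = [row for row in map(parse_row, sample) if row is not None]
for name, used, alloc, pct, count in rows:
    # Integer division reproduces the rounded-down percentage shown by the router.
    print(f"{name}: {used}/{alloc} -> {used * 100 // alloc}% (router shows {pct}%)")
```

If that reading is right, a high value here says how full each pool is relative to what CEF has allocated for it, which is a different measure from overall RAM or CPU utilization.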