This topic is to discuss the following lesson:

Hello Rene,

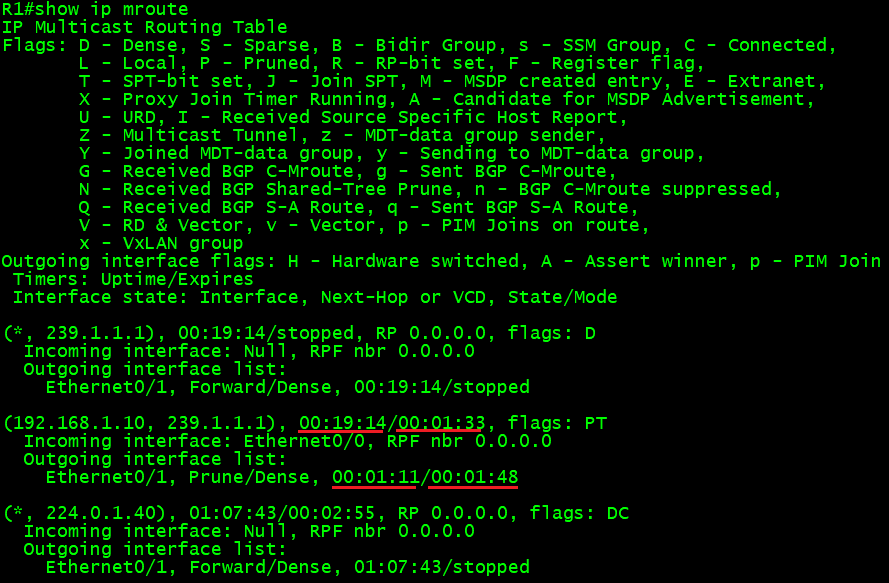

The explanation under the R1#show ip mroute 239.1.1.1 output:

“R1 has been receiving this traffic for one minute and 16 seconds.” I think R1 has been sending this traffic out Gi0/1.

"R1 is forwarding traffic on its Gi0/1 interface that is connected to R1." I think there is a typo here: R1's Gi0/1 is connected to R2.

Hi Jose,

That’s correct, I just fixed this. Thanks for letting me know!

Rene

Hi,

What IOS are you using for H1, H2, and the routers?

Thanks

Hi Sims,

I believe I used Cisco VIRL for this with the latest IOSv image:

R1#show version

Cisco IOS Software, IOSv Software (VIOS-ADVENTERPRISEK9-M), Version 15.6(2)T, RELEASE SOFTWARE (fc2)

Rene

Just to let you know: if your image is a Layer 3 device, then for the config to work the way Rene has it (with ip default-gateway), you need to enter no ip routing.

Otherwise, just configure a default route.

The method used in the lab is normally meant for Layer 2 devices.

That’s right, it’s either this:

R1(config)#ip route 0.0.0.0 0.0.0.0 192.168.1.254

Or this:

R1(config)#no ip routing

R1(config)#ip default-gateway 192.168.1.254

The ip default-gateway command doesn’t do anything until routing is disabled.

“We’ll discuss sparse-dense mode in another lesson”

Did you create a lesson yet for this?

Hi Chris,

I see I never added it; I will try to do so soon.

Hi Rene, thanks for explaining dense mode, graft messages, etc.

I don't see a PIMv2 Graft message when I do the same lab and join H3 to IGMP group 239.1.1.1.

Is there a specific reason for this?

PIM debugging is on:

*Jul 28 05:39:47.672: PIM(0): Building Graft message for 239.1.1.1, Ethernet0/0: no entries

*Jul 28 05:39:47.672: PIM(0): Building Graft message for 239.1.1.1, Ethernet0/3: no entries

*Jul 28 05:39:47.672: PIM(0): Building Graft message for 239.1.1.1, Ethernet0/2: no entries

*Jul 28 05:40:41.660: PIM(0): Received v2 Assert on Ethernet0/2 from 192.168.23.2

*Jul 28 05:40:41.660: PIM(0): Assert metric to source 192.168.1.1 is [110/20]

*Jul 28 05:40:41.660: PIM(0): We win, our metric [110/20]

*Jul 28 05:40:41.660: PIM(0): Schedule to prune Ethernet0/2

*Jul 28 05:40:41.660: PIM(0): (192.168.1.1/32, 239.1.1.1) oif Ethernet0/2 in Forward state

*Jul 28 05:40:41.660: PIM(0): Send v2 Assert on Ethernet0/2 for 239.1.1.1, source 192.168.1.1, metric [110/20]

*Jul 28 05:40:41.661: PIM(0): Assert metric to source 192.168.1.1 is [110/20]

*Jul 28 05:40:41.661: PIM(0): We win, our metric [110/20]

*Jul 28 05:40:41.661: PIM(0): (192.168.1.1/32, 239.1.1.1) oif Ethernet0/2 in Forward state

*Jul 28 05:40:41.661: PIM(0): Received v2 Join/Prune on Ethernet0/2 from 192.168.23.2, to us

*Jul 28 05:40:41.661: PIM(0): Prune-list: (192.168.1.1/32, 239.1.1.1)

*Jul 28 05:40:41.661: PIM(0): Prune Ethernet0/2/239.1.1.1 from (192.168.1.1/32, 239.1.1.1)

Hi Vinod,

In your output, I see that it is building the graft message, but nothing shows it being sent or received.

Couple of things to check:

- Are R1 and R3 PIM neighbors?

- Is the interface between R1-R3 currently pruned?

- Does R1 show anything in its debugs?

- Try a packet capture between R1 and R3 to check whether you see any graft messages.
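The checks in the list above can be run with standard IOS show and debug commands. This is a minimal sketch, assuming the lesson's group 239.1.1.1 and that R3 is the downstream router that should send the Graft toward R1; running the same commands on R3 lets you compare both sides:

```
! 1. Is R3 listed as a PIM neighbor on the R1-R3 link?
R1#show ip pim neighbor
! 2. Is the R1-R3 interface in the OIL, and is it in Prune/Dense state?
R1#show ip mroute 239.1.1.1
! 3. Limit PIM debugging to the lesson's group and watch for Graft messages
R1#debug ip pim 239.1.1.1
```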

Rene

Hi Rene,

I can see that the PIM neighborship is up, but the port is not showing in the outgoing interface list. What could be the reason, and how do I troubleshoot it?

R1#sh ip pim interface

| Address | Interface | Ver/Mode | Nbr Count | Query Intvl | DR Prior | DR |
|---|---|---|---|---|---|---|
| 192.168.1.254 | Ethernet0/0 | v2/S | 0 | 30 | 1 | 192.168.1.254 |
| 192.168.14.1 | Ethernet0/1 | v2/S | 1 | 30 | 1 | 192.168.14.4 |
| 192.168.12.1 | Ethernet0/3 | v2/S | 1 | 30 | 1 | 192.168.12.2 |

R1#sh ip mroute

IP Multicast Routing Table

Flags: D - Dense, S - Sparse, B - Bidir Group, s - SSM Group, C - Connected,

L - Local, P - Pruned, R - RP-bit set, F - Register flag,

T - SPT-bit set, J - Join SPT, M - MSDP created entry, E - Extranet,

X - Proxy Join Timer Running, A - Candidate for MSDP Advertisement,

U - URD, I - Received Source Specific Host Report,

Z - Multicast Tunnel, z - MDT-data group sender,

Y - Joined MDT-data group, y - Sending to MDT-data group,

V - RD & Vector, v - Vector

Outgoing interface flags: H - Hardware switched, A - Assert winner

Timers: Uptime/Expires

Interface state: Interface, Next-Hop or VCD, State/Mode

(*, 224.0.1.40), 00:40:15/00:02:53, RP 0.0.0.0, flags: DCL

Incoming interface: Null, RPF nbr 0.0.0.0

Outgoing interface list:

Ethernet0/0, Forward/Sparse, 00:40:13/00:02:53

R1#

Hi

I would like to clear up a doubt.

When multicast traffic hits R1 and R2, these routers create a (*,G) entry in their multicast routing table along with the (S,G) entry. Could you tell me why the (*,G) entry is required for this communication?

I am not able to understand why these routers created this entry.

Hello Ratheesh

In the lesson shown, there is a (*, 239.1.1.1) entry that appears in the multicast routing table. This appears only after H2 has had the ip igmp join-group 239.1.1.1 command applied. This means that an entry has been created in the multicast routing table because of the join request coming from H2. No traffic has yet been sent for this group, so there is no information about the source, and this is why we have a (*,g) entry.

Once traffic begins to traverse the network, we have information about the source, which in the case of the lesson is S1. This is why we have a second entry, (192.168.1.1, 239.1.1.1), which is an (S,G) entry that routes the actual traffic from a specific source to a specific group.
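A minimal sketch of that sequence on the CLI. It assumes H2 is an IOS router acting as a host on a hypothetical GigabitEthernet0/1 interface, and that R2 is H2's first-hop router (both are assumptions; check whichever router H2 actually connects to):

```
! On H2: join the group, so the first-hop router creates (*, 239.1.1.1)
H2(config)#interface GigabitEthernet0/1
H2(config-if)#ip igmp join-group 239.1.1.1
! Before S1 sends anything: only the (*, G) entry is present
R2#show ip mroute 239.1.1.1
! After S1 (192.168.1.1) starts sending: look at the (S, G) entry
R2#show ip mroute 239.1.1.1 192.168.1.1
```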

I hope this has been helpful!

Laz

Dear Laz,

But even without the ip igmp join-group command, there are (*,G) and (S,G) entries in the routing table when we just send the feed. Why is that?

Hello Roshan

Yes, this is the case because PIM sparse mode uses an RP and the Auto-RP discovery protocol to automatically find the RP in the network. Auto-RP discovery uses the multicast group address 224.0.1.40, which is why you will see (*, 224.0.1.40) in the output of the lesson even before the join-group command is applied.

However, it is once the ip igmp join-group 239.1.1.1 command is implemented that you will see the specific route for that group as shown in the lesson.
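To check that (*, 224.0.1.40) comes from the router's own Auto-RP discovery membership and not from the join-group command, you could inspect that group directly. In the show ip mroute output posted earlier in this thread, this entry carries the L (Local) flag. A sketch, using R1 as an example:

```
! The Auto-RP discovery group entry; look for L (Local) in the flags
R1#show ip mroute 224.0.1.40
! The router's own IGMP membership for the same group
R1#show ip igmp groups 224.0.1.40
```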

As for the timers you see in the show ip mroute output, these are the “Uptime/Expires” timers. The first indicates how long the entry has been in the IP multicast routing table while the second states how long until the entry will be removed from the table if no additional traffic is seen.

I hope this has been helpful!

Laz

Dear Laz,

Thanks for the reply.

About the timers in the second underlined row: 00:01:11 seems to count up to 3 minutes and then reset to zero, while 00:01:48 seems to start at 3 minutes, count down to zero, and then reset to 3 minutes. What does that mean?

Hello Roshan

I was hasty in my response and forgot to add the information for the second set of timers. The explanation I gave before had to do with the first set of timers you indicated.

The second set of timers, which correspond to a particular interface, appear in what is known as the Outgoing Interface List, or OIL. This is a list of interfaces that correspond to each of the entries in the mroute table. In the example you gave, there is only one interface, and that is Ethernet0/1. Each interface in the OIL has its own set of timers, and when using dense mode these indicate something different from the Uptime/Expires timers of the entry itself.

An interface in the OIL can be in one of two states: Forward or Prune. When in the Forward state, the expiry timer does not get decremented. In some IOS versions it simply says “stopped”, and in others it has a value of zero. You can see this in your own output, where there are a couple of routes for which the interface is in the Forward/Dense state and the expiry timer says “stopped”.

The second state is the Prune state, which is the state of the interface you underlined. When there are no receivers for a particular route, the router will send a prune message upstream. Once the router determines that it must prune an interface from the OIL, it starts a 3-minute timer, after which the prune state times out.
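To see both sets of timers change, you could repeat the same command every few seconds and compare the entry's Uptime/Expires pair with the per-interface timers in the OIL. A sketch, assuming the lesson's source and group and that R1 is the router whose OIL you are watching:

```
! Repeat and compare over time:
!  - entry line: Uptime/Expires for (192.168.1.1, 239.1.1.1)
!  - Forward/Dense interface: expiry shows "stopped" or 00:00:00
!  - Prune/Dense interface: expiry counts down until the prune times out
R1#show ip mroute 239.1.1.1 192.168.1.1
```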

I hope this has been helpful!

Laz

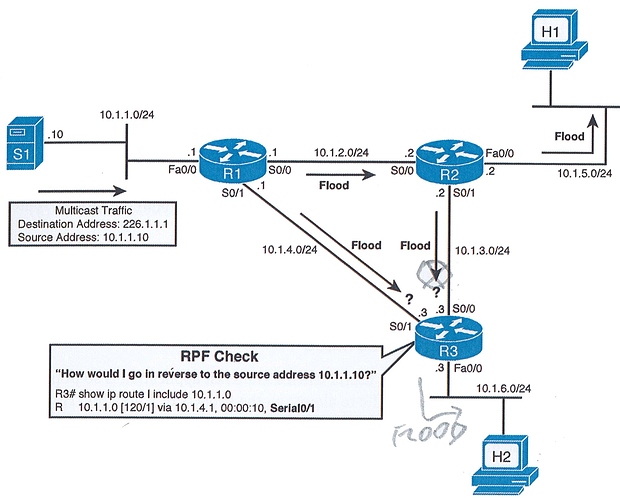

Hi Rene and staff,

Sorry if my question is basic; I am new to multicast and am trying to understand how dense mode avoids loops.

Here is the picture:

R3 receives the multicast packet from R2 via s0/0: due to the RPF check, the packet is discarded.

R3 receives the same multicast packet from R1 via s0/1: due to split horizon, it is not forwarded back out this interface.

But these two rules alone do not seem to end the story.

Why doesn't R3 forward the packet received on s0/1 out s0/0?

Regards
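The RPF check described in the question can be inspected on R3 itself. A minimal sketch, assuming the source is 192.168.1.1 and the group is 239.1.1.1 as in the lesson (the addresses in the picture may differ):

```
! Which interface does R3 use as its RPF interface toward the source?
R3#show ip rpf 192.168.1.1
! The Incoming interface (RPF) and the OIL for the (S, G) entry
R3#show ip mroute 239.1.1.1 192.168.1.1
```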