This topic is to discuss the following lesson:

Hey Rene, great info! Would the Auto-RP "mapping agent behind a spoke" problem that you mentioned in the previous post also be solved by configuring PIM NBMA mode on R1 Serial0/0?

Hi Rene,

Can the "mapping agent behind a spoke" issue also be solved with the ip pim nbma-mode command?

Davis

Hi Davis,

I’m afraid not. The Auto-RP groups 224.0.1.39 and 224.0.1.40 use dense mode flooding, and NBMA mode doesn’t support this. If your mapping agent is behind a spoke router, you’ll have to pick one of these three options to fix it:

- Get rid of the point-to-multipoint interfaces and use sub-interfaces. This will allow the hub router to forward multicast to all spoke routers since we are using different interfaces to send and receive traffic.

- Move the mapping agent to a router above the hub router, make sure it's not behind a spoke router.

- Create a tunnel between the spoke routers so that they can learn directly from each other.
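As a rough illustration of the first option, the hub’s multipoint Frame Relay interface could be split into point-to-point sub-interfaces, one per spoke. The DLCIs (102, 103) and subnets below are made-up placeholders, not values from the lesson. The `ip pim autorp listener` line is an assumption that only applies if the interfaces run plain sparse mode rather than sparse-dense mode:

```
R1(config)#interface Serial0/0
R1(config-if)#no ip address
R1(config-if)#encapsulation frame-relay
! One point-to-point sub-interface (and subnet) per spoke; DLCIs are placeholders
R1(config)#interface Serial0/0.102 point-to-point
R1(config-subif)#ip address 192.168.12.1 255.255.255.0
R1(config-subif)#ip pim sparse-mode
R1(config-subif)#frame-relay interface-dlci 102
R1(config)#interface Serial0/0.103 point-to-point
R1(config-subif)#ip address 192.168.13.1 255.255.255.0
R1(config-subif)#ip pim sparse-mode
R1(config-subif)#frame-relay interface-dlci 103
! Allows the Auto-RP groups 224.0.1.39/40 to be flooded on sparse-mode interfaces
R1(config)#ip pim autorp listener
```

Because each spoke now sits on its own sub-interface, an Auto-RP discovery message received on Serial0/0.102 can be flooded out Serial0/0.103 without being sent back out the interface it arrived on.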

Ok. Thanks Rene.

Davis

Hi Rene,

Could you please confirm whether I need to use ip pim nbma-mode when I am running multicast in a DMVPN hub-and-spoke topology?

Just like in your topology, R1 is the hub and R2 and R3 are DMVPN spokes. I need to configure the hub and spoke tunnels with ip ospf network point-to-multipoint to meet a certain requirement. Do I need to use "ip pim nbma-mode" here? If not, can you please tell me why not in this DMVPN environment?

Hello Abdus

Yes, you will have to use the ip pim nbma-mode command to get your topology to function correctly. However, another option is to use point-to-point subinterfaces and place each spoke connection in a different subnet. This would solve the issue as well.
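For the DMVPN case, a minimal hub-side sketch with NBMA mode might look like the lines below. The tunnel address, NHRP network-id and tunnel source are placeholder assumptions; only `ip ospf network point-to-multipoint` and `ip pim nbma-mode` come from the question itself:

```
Hub(config)#interface Tunnel0
Hub(config-if)#ip address 172.16.123.1 255.255.255.0
Hub(config-if)#tunnel source GigabitEthernet0/1
Hub(config-if)#tunnel mode gre multipoint
Hub(config-if)#ip nhrp network-id 1
! Replicate multicast to every spoke that registers with NHRP
Hub(config-if)#ip nhrp map multicast dynamic
Hub(config-if)#ip ospf network point-to-multipoint
Hub(config-if)#ip pim sparse-mode
! Track PIM joins per neighbor (spoke) instead of per interface
Hub(config-if)#ip pim nbma-mode
```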

I hope this has been helpful!

Laz

Hi Rene,

Suppose the environment is DMVPN phase 3 instead of Frame Relay, so the spoke routers communicate with each other directly without going through the hub router. Let’s assume the PIM mode is sparse mode, the hub router is the RP, the multicast source is behind one spoke and the receiver is behind the other spoke. The multicast stream first goes to the hub router because of the NHRP multicast mapping, and the hub then forwards this traffic to the other spoke, which requires ip pim nbma-mode on the hub. When the other spoke receives this traffic, it checks the source address to join the SPT instead of the RPT, so from then on the multicast traffic should go directly from spoke to spoke. My question is: how can that happen, since we do not have NHRP multicast mappings between the spoke routers? Do we need to configure NHRP multicast mappings between the spokes to solve this, can ip pim nbma-mode solve it, or is there something else that deals with this situation?

Hi Hussein,

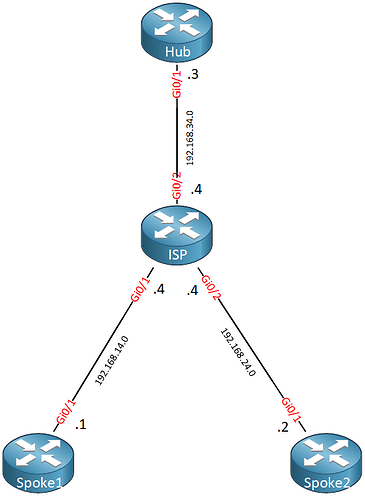

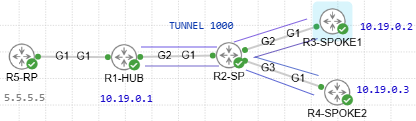

I first thought you would need ip pim nbma-mode on the hub tunnel interface but in reality, we don’t. For example, take this topology:

Then add these additional commands:

Hub, Spoke1 & Spoke2

```
(config)#ip multicast-routing
(config)#interface Tunnel 0
(config-if)#ip pim sparse-mode
(config)#ip pim rp-address 1.1.1.1
```

The loopback of the hub is the RP, so I need PIM sparse mode there too:

```
Hub(config)#interface Loopback 0
Hub(config-if)#ip pim sparse-mode
```

On the spoke routers, I’ll add a LAN interface that I’ll use as the source and receiver.

Spoke1:

```
Spoke1(config)#interface GigabitEthernet 0/0
Spoke1(config-if)#ip address 192.168.1.1 255.255.255.0
Spoke1(config-if)#ip pim sparse-mode
Spoke1(config)#router ospf 1
Spoke1(config-router)#network 192.168.1.0 0.0.0.255 area 1
```

Spoke2:

```
Spoke2(config)#interface GigabitEthernet 0/0
Spoke2(config-if)#ip address 192.168.3.3 255.255.255.0
Spoke2(config-if)#ip pim sparse-mode
Spoke2(config)#router ospf 1
Spoke2(config-router)#network 192.168.3.0 0.0.0.255 area 1
```

Let’s join a multicast group:

```
Spoke2(config)#interface GigabitEthernet 0/0
Spoke2(config-if)#ip igmp join-group 239.1.1.1
```

And send some pings:

```
Spoke1#ping 239.1.1.1 source GigabitEthernet 0/0 repeat 5
Type escape sequence to abort.
Sending 5, 100-byte ICMP Echos to 239.1.1.1, timeout is 2 seconds:
Packet sent with a source address of 192.168.1.1
Reply to request 0 from 192.168.3.3, 14 ms
Reply to request 1 from 192.168.3.3, 13 ms
Reply to request 2 from 192.168.3.3, 7 ms
Reply to request 3 from 192.168.3.3, 12 ms
Reply to request 4 from 192.168.3.3, 9 ms
```

No problem at all. If you do enable NBMA mode on the hub, you’ll see a lot of duplicate packets. In case you want to see for yourself, here are the configs:

Spoke1-show-run-2018-02-16-11-20-02-clean.txt (880 Bytes)

Hub-show-run-2018-02-16-11-20-01-clean.txt (762 Bytes)

Spoke2-show-run-2018-02-16-11-20-04-clean.txt (879 Bytes)
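If you want to inspect the forwarding state in this lab yourself, a few standard show commands help. This is a sketch that assumes the group 239.1.1.1 and the interfaces used above; the exact output varies by IOS version, so none is reproduced here:

```
! On the hub: list the PIM neighbors (the spokes) learned on Tunnel0
Hub#show ip pim neighbor
! On the hub: inspect the (*,G) and (S,G) entries and their outgoing interface lists
Hub#show ip mroute 239.1.1.1
! On Spoke2: confirm the membership created by "ip igmp join-group"
Spoke2#show ip igmp groups 239.1.1.1
! To compare with the duplicate-packet case, enable NBMA mode on the hub tunnel
Hub#configure terminal
Hub(config)#interface Tunnel 0
Hub(config-if)#ip pim nbma-mode
```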

Rene

Thanks @ReneMolenaar

Now I know it works without PIM NBMA mode, but I still don’t understand how the multicast traffic goes directly from spoke to spoke. We do not have any multicast mapping between the spoke routers, so only unicast traffic should be allowed between them. Can you please explain what exactly happens behind the scenes?

Hi Hussein,

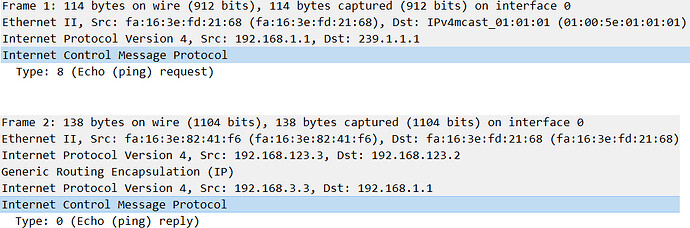

I had to think about this for a while and do another lab, and something interesting happened. With the DMVPN topology that I usually use (switch in the middle), multicast traffic went directly from spoke1 to spoke2. Take a look at this Wireshark capture:

The ICMP request from spoke1 isn’t encapsulated. The reply from spoke2 is.

So I labbed this up again and replaced the switch in the middle with a router. When you do this, all multicast traffic from spoke1 to spoke2 goes through the hub (because we have a static multicast map for the hub IP address). There is no direct spoke-to-spoke multicast traffic.

The only way to achieve that is by adding the ip nhrp map multicast command with the IP address of the remote spoke. Not a feasible solution as it doesn’t scale well. With two spoke routers it’s no problem but if you want multicast traffic between all spoke routers then you’ll need a full mesh of “ip nhrp map multicast” commands.
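For illustration, the extra static mapping on Spoke1 could look something like this. The NBMA addresses 192.0.2.1 (hub) and 192.0.2.3 (Spoke2) are hypothetical placeholders for the routers’ physical addresses, and every other spoke would need the mirrored lines:

```
Spoke1(config)#interface Tunnel0
! Existing mapping: replicate multicast to the hub's NBMA address (placeholder)
Spoke1(config-if)#ip nhrp map multicast 192.0.2.1
! Additional mapping: also replicate multicast directly to Spoke2's NBMA address
Spoke1(config-if)#ip nhrp map multicast 192.0.2.3
```

With N spokes, each spoke needs N-1 of these extra lines, N(N-1) in total, which is why this approach doesn’t scale.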

If you want to see it for yourself, here’s the physical topology I used:

And the configurations are here:

Spoke2-show-run-2018-03-23-14-53-06-clean.txt (1.1 KB)

Hub-show-run-2018-03-23-14-53-01-clean.txt (932 Bytes)

Spoke1-show-run-2018-03-23-14-53-03-clean.txt (1.1 KB)

ISP-show-run-2018-03-23-14-53-08-clean.txt (471 Bytes)

Rene

Hello Rene,

Why did you withdraw the Multicast Boundary Filtering lesson? I think it’s very interesting.

Please put it back.

Warm Regards!

Ulrich

Hello Ulrich,

We didn’t take it down; it’s still here:

Did you find a link somewhere that doesn’t work anymore?

Rene

Thank you again @ReneMolenaar,

Now the logic of multicast mapping makes sense to me.

But in this case, you should need ip pim nbma-mode on the tunnel interface of the hub router to get around the RPF rule and solve the split-horizon problem, and I did not see it configured in the configurations attached to your comment.

A second question came to mind while I was typing this reply: how does PIM NBMA mode deal with PIM Prune messages?

Also, when you use the switch, why is the ICMP request from Spoke1 not encapsulated in GRE, using the NBMA address instead? Does this apply to all traffic or only to ICMP traffic?

Hi Hussein,

I just redid this lab on some real hardware and I think this is some Cisco VIRL quirk. When I run it on VIRL, I don’t need to use PIM nbma mode. On real hardware, I do need it.

```
Spoke1-VIRL#show version | include 15
Cisco IOS Software, IOSv Software (VIOS-ADVENTERPRISEK9-M), Version 15.6(3)M2, RELEASE SOFTWARE (fc2)
```

```
Spoke1-REAL#show version | include 15
Cisco IOS Software, 2800 Software (C2800NM-ADVENTERPRISEK9-M), Version 15.1(4)M7, RELEASE SOFTWARE (fc2)
```

Let’s join a group:

```
Spoke2(config)#interface gi0/0
Spoke2(config-if)#ip igmp join-group 239.3.3.3
```

The hub creates some entries.

Cisco VIRL:

```
Hub#
MRT(0): Create (*,239.3.3.3), RPF (unknown, 0.0.0.0, 0/0)
MRT(0): WAVL Insert interface: Tunnel0 in (* ,239.3.3.3) Successful
MRT(0): set min mtu for (3.3.3.3, 239.3.3.3) 0->1476
MRT(0): Add Tunnel0/239.3.3.3 to the olist of (*, 239.3.3.3), Forward state - MAC not built
MRT(0): Add Tunnel0/239.3.3.3 to the olist of (*, 239.3.3.3), Forward state - MAC not built
MRT(0): Set the PIM interest flag for (*, 239.3.3.3)
```

Real hardware:

```
Hub#
PIM(0): Received v2 Join/Prune on Tunnel0 from 172.16.123.2, to us
PIM(0): Join-list: (*, 239.3.3.3), RPT-bit set, WC-bit set, S-bit set
PIM(0): Check RP 3.3.3.3 into the (*, 239.3.3.3) entry
PIM(0): Adding register decap tunnel (Tunnel2) as accepting interface of (*, 239.3.3.3).
MRT(0): Create (*,239.3.3.3), RPF /0.0.0.0
MRT(0): WAVL Insert interface: Tunnel0 in (* ,239.3.3.3) Successful
MRT(0): set min mtu for (3.3.3.3, 239.3.3.3) 0->1476
MRT(0): Add Tunnel0/239.3.3.3 to the olist of (*, 239.3.3.3), Forward state - MAC not built
PIM(0): Add Tunnel0/172.16.123.2 to (*, 239.3.3.3), Forward state, by PIM *G Join
MRT(0): Add Tunnel0/239.3.3.3 to the olist of (*, 239.3.3.3), Forward state - MAC not built
```

Then I send some traffic from spoke1:

```
Spoke1#ping 239.3.3.3 repeat 1000
Type escape sequence to abort.
Sending 1000, 100-byte ICMP Echos to 239.3.3.3, timeout is 2 seconds:
Reply to request 0 from 10.255.1.190, 40 ms
Reply to request 1 from 10.255.1.190, 13 ms
Reply to request 2 from 10.255.1.190, 13 ms
```

Here’s what the hub does:

Cisco VIRL:

```
Hub#
MRT(0): Reset the z-flag for (10.255.1.189, 239.3.3.3)
MRT(0): Create (10.255.1.189,239.3.3.3), RPF (unknown, 192.168.34.4, 1/0)
MRT(0): WAVL Insert interface: Tunnel0 in (10.255.1.189,239.3.3.3) Successful
MRT(0): set min mtu for (10.255.1.189, 239.3.3.3) 18010->1476
MRT(0): Add Tunnel0/239.3.3.3 to the olist of (10.255.1.189, 239.3.3.3), Forward state - MAC not built
MRT(0): Reset the z-flag for (172.16.123.1, 239.3.3.3)
MRT(0): (172.16.123.1,239.3.3.3), RPF install from /0.0.0.0 to Tunnel0/172.16.123.1
MRT(0): Set the F-flag for (*, 239.3.3.3)
MRT(0): Set the F-flag for (172.16.123.1, 239.3.3.3)
MRT(0): Create (172.16.123.1,239.3.3.3), RPF (Tunnel0, 172.16.123.1, 110/1000)
MRT(0): Set the T-flag for (172.16.123.1, 239.3.3.3)
MRT(0): Update Tunnel0/239.3.3.3 in the olist of (*, 239.3.3.3), Forward state - MAC not built
MRT(0): Update Tunnel0/239.1.1.1 in the olist of (*, 239.1.1.1), Forward state - MAC not built
```

Real hardware:

```
Hub#
PIM(0): Received v2 Register on Tunnel0 from 172.16.123.1
     for 1.1.1.1, group 239.3.3.3
PIM(0): Adding register decap tunnel (Tunnel2) as accepting interface of (1.1.1.1, 239.3.3.3).
MRT(0): Reset the z-flag for (1.1.1.1, 239.3.3.3)
MRT(0): (1.1.1.1,239.3.3.3), RPF install from /0.0.0.0 to Tunnel0/172.16.123.1
MRT(0): Create (1.1.1.1,239.3.3.3), RPF Tunnel0/172.16.123.1
PIM(0): Insert (1.1.1.1,239.3.3.3) join in nbr 172.16.123.1's queue
PIM(0): Building Join/Prune packet for nbr 172.16.123.1
PIM(0): Adding v2 (1.1.1.1/32, 239.3.3.3), S-bit Join
PIM(0): Send v2 join/prune to 172.16.123.1 (Tunnel0)
PIM(0): Received v2 Register on Tunnel0 from 172.16.123.1
     for 1.1.1.1, group 239.3.3.3
MRT(0): Set the T-flag for (1.1.1.1, 239.3.3.3)
PIM(0): Removing register decap tunnel (Tunnel2) as accepting interface of (1.1.1.1, 239.3.3.3).
PIM(0): Installing Tunnel0 as accepting interface for (1.1.1.1, 239.3.3.3).
PIM(0): Received v2 Join/Prune on Tunnel0 from 172.16.123.2, to us
PIM(0): Join-list: (1.1.1.1/32, 239.3.3.3), S-bit set
PIM(0): Insert (1.1.1.1,239.3.3.3) join in nbr 172.16.123.1's queue
PIM(0): Building Join/Prune packet for nbr 172.16.123.1
PIM(0): Adding v2 (1.1.1.1/32, 239.3.3.3), S-bit Join
PIM(0): Send v2 join/prune to 172.16.123.1 (Tunnel0)
PIM(0): Received v2 Register on Tunnel0 from 172.16.123.1
     for 1.1.1.1, group 239.3.3.3
PIM(0): Send v2 Register-Stop to 172.16.123.1 for 1.1.1.1, group 239.3.3.3
```

I need to add IP PIM nbma mode on my real hardware to make it work. The output of the debugs is a bit different but that’s probably related to the IOS version. There’s probably also something funny happening with the switch on VIRL.

About the pruning: without NBMA mode, spokes don’t see each other’s prune messages, so they won’t be able to send a prune override message. Once you enable NBMA mode, the hub treats each spoke as a separate “connection”, so it forwards prunes to all spokes, allowing spokes to send prune override messages.
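To watch how the hub handles Joins and Prunes yourself, you could enable the PIM and multicast routing debugs on the hub and then remove the group membership on a receiving spoke. This is a sketch: which debugs produced the output above isn’t stated, so `debug ip pim` and `debug ip mrouting` are an assumption based on the `PIM(0)` and `MRT(0)` prefixes:

```
Hub#debug ip pim
Hub#debug ip mrouting
! On the receiving spoke, leave the group so it stops sending Joins and prunes
Spoke2(config)#interface gi0/0
Spoke2(config-if)#no ip igmp join-group 239.3.3.3
! Back on the hub: check the outgoing interface list, then stop debugging
Hub#show ip mroute 239.3.3.3
Hub#undebug all
```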

Rene

Very good explanation, thank you very much @ReneMolenaar. Now everything is clear.

Hello,

Thanks for the lesson. I have a question:

In one of his answers to a user, Rene said:

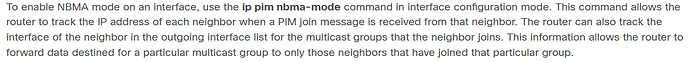

However, Cisco documentation says…

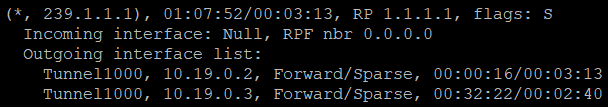

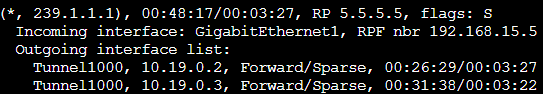

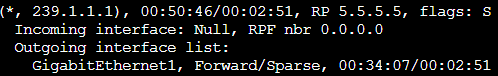

So I was a little confused. When a spoke sends a Leave message, I assumed that the hub, since it tracks the IP address of each PIM Join message previously sent by both spokes, would only prune the OIL entry for that specific neighbor. For example, if I have SPOKE1 (10.19.0.2) and SPOKE2 (10.19.0.3) and they both join 239.1.1.1, then with PIM NBMA mode enabled on the HUB I see:

HUB MRT

Now, if SPOKE1 (10.19.0.2) sends a Leave message, what I see is that the HUB immediately deletes the Tunnel1000, 10.19.0.2 entry from the OIL, leaving the other entries unpruned.

So I am assuming that no prune override is actually needed with NBMA mode, because the HUB is able to differentiate PIM Join/Leave messages by IP address. Is this correct or not?

The other option would be what Rene says: the hub sends the PIM Leave to all other spokes, and the spokes then send their Join messages to override the Prune. But this looks a little unnecessary to me, given that NBMA mode already tracks PIM Joins by IP address.

Do you know which way it is actually done?

Thanks,

Jose

Hello Jose

First of all, concerning Rene’s statement and the statement from the Cisco source:

You’re correct that the hub tracks which spoke joined which multicast group, so when a spoke sends a Prune (or stops sending Joins), the hub removes only that spoke’s interface (or neighbor) from the OIL for the multicast group.

Now, just to clarify, PIM doesn’t have “leave” messages. A router signals a leave by sending a Prune message or by no longer sending Join refreshes, so the state eventually times out. Having said that, without NBMA mode the hub doesn’t forward those Prune messages from one spoke to the others, so the spokes can’t respond with prune overrides. With the command set, the hub forwards Prune messages received from one spoke to the other spokes, giving them the opportunity to say they’re still interested in the traffic using prune overrides.

Now this is where it gets tricky. Yes, for directly connected spokes, this is indeed true. In NBMA mode, the hub tracks each neighbor individually and removes only that neighbor from the OIL when it receives a Prune message or stops receiving Joins.

HOWEVER, you still need prune overrides to prevent the hub from sending Prune messages upstream. Imagine this scenario:

- Spoke1 sends a prune upstream (toward the hub).

- The hub forwards this Prune message to other spokes (because it’s NBMA mode) and it may send it upstream as well.

- Now Spoke2, which still wants the group, has a chance to say:

  - “Wait, I still want this multicast group!” by sending a Prune Override.

- If Spoke2 doesn’t send a Prune Override:

  - The upstream router (upstream of the hub) might prune the entire branch (even if Spoke2 wanted the traffic!).

So prune overrides are not always about which spokes are in the OIL, but also about preventing the upstream prune. Does that make sense?

I hope this has been helpful!

Laz

Hello Laz,

Thanks for the reply. However, I labbed this and the behaviour you described is not what happens, at least in CML:

Without PIM NBMA mode enabled on R1’s tunnel interface, when SPOKE1’s lo0 interface leaves 239.1.1.1, a Prune message is sent by R1 toward the RP. This message hits the HUB first, and then the RP. The RP ends up deleting interface G1 from the OIL, and SPOKE2 stops receiving traffic until it sends a PIM Join message. As far as I know, this behaviour is normal and expected (please correct me if not).

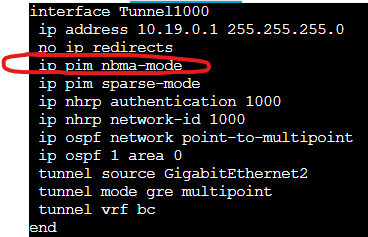

Now, to avoid this prune/unprune issue, we use PIM NBMA mode on the tunnel interface of the HUB:

HUB

With both SPOKES’ loopback interfaces joined to 239.1.1.1, the MRT on the HUB looks like this:

The RP only lists G1 on the OIL, as expected:

R5-RP MRT

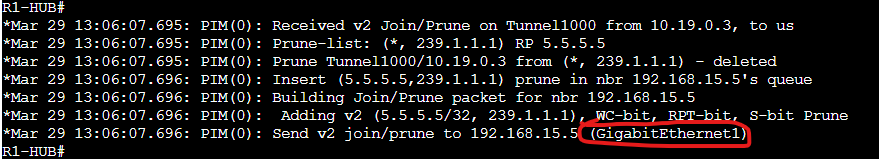

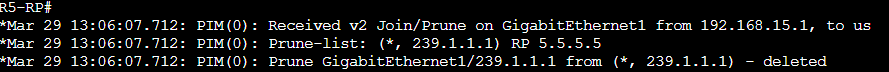

So I ran the debugs and issued the following command on SPOKE1 to generate a PIM Prune message for 239.1.1.1:

SPOKE1 lo0


HUB

However, the HUB takes care of everything and does not even forward the Prune message to the RP (nor to the other SPOKES), as long as other SPOKES are still interested in traffic for that group (in this case, SPOKE2 is still joined).

SPOKE2 does not receive any Prune message either. I ran the debug several times, joining and leaving the group on SPOKE1, and SPOKE2 never received a Prune message. This means that the HUB, even with PIM NBMA mode, does not forward those Prune messages to the other spokes. In my opinion there is no point in doing so with PIM NBMA mode enabled because, again, the HUB takes care of everything and does not forward Prune messages upstream unless no more SPOKES are interested in the traffic, like here:

SPOKE2


HUB

RP (this is when the RP receives the Prune message: when none of the SPOKES are interested in the traffic!)

It is curious to see how the HUB, with PIM NBMA mode enabled, acts similarly to an IGMP snooping switch, forwarding the Prune message upstream to the RP only when no more SPOKES are interested in the traffic. The key difference is that the HUB does not need to receive reports right after the Prune to know that there are SPOKES still interested in the traffic; it relies on the OIL. Is that the reason why the HUB does not send the Prune message to the SPOKES?

So again, and sorry if I am being annoying, but I cannot understand Rene’s answer that I quoted in my previous post: why is prune override needed with PIM NBMA mode? Or, more precisely, why should a PIM NBMA-enabled router forward Prune messages to the rest of the SPOKES if all it has to do is remove the corresponding IP address/interface entry from the OIL? (That is what actually happens; I have not been able to reproduce the behaviour Rene describes in his answer in a lab.)

If Rene’s answer is right, in what scenario would that happen?

Thanks, I appreciate the help and interest,

Jose

Hello Jose

I appreciate your thoroughness and testing, and it’s useful for all of us! I stand corrected. Based on your experimentation in your particular setup, it seems that with NBMA mode enabled, the hub does not need to forward Prune messages to the other spokes (and prune overrides are unnecessary) for the hub and spokes to function as they should. I will ask Rene to take a look at the lesson and consider making any clarifications necessary. Thanks again for your effort and sharing your results with us!

I hope this has been helpful!

Laz