This topic is to discuss the following lesson:

Hello Rene,

A couple of questions.

- Could you give me an example that shows the difference between congestion management and congestion avoidance? To be honest, I do not understand TCP global synchronization very well, so it would be great if your example related congestion management and avoidance to TCP global synchronization.

- This question is about the priority command vs. the bandwidth command. As you know, the priority command ensures a maximum bandwidth during congestion, while the bandwidth command ensures a minimum bandwidth during congestion. For example, priority 10 (kbps) ensures at most 10 kbps of traffic during congestion, while bandwidth 10 ensures at least 10 kbps. Why does it work that way? What is the benefit? Could you explain it with an example so I can visualize it?

Thank you so much.

Azm

Hi Azm,

Congestion management is about dealing with congestion when it occurs, and congestion avoidance is about trying to prevent congestion. To understand congestion avoidance, you have to think about how the TCP window size and global synchronization work. I explained this in this lesson:

Congestion avoidance works by dropping selected TCP segments before the queue is full. The senders of those segments reduce their window size, which slows down their TCP transmissions and, by doing so, prevents congestion from happening.
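To make global synchronization more concrete, here is a toy Python model. It is an illustrative assumption, not how TCP or WRED are really implemented: each flow grows its window by one segment per round and halves it on a loss. With tail drop, a full queue drops packets from every flow at once, so all windows collapse together. With early random drop, the router starts dropping at 80% load (an arbitrary threshold chosen for this sketch) and only one randomly chosen flow backs off. The function name `simulate` and all parameters are hypothetical.

```python
import random


def simulate(num_flows, capacity, rounds, early_drop, seed=1):
    """Toy AIMD model of TCP flows sharing one output queue (illustration only).

    Each round, every flow offers cwnd segments. If the total exceeds the
    capacity, the queue overflows (tail drop) and every flow halves its window
    at the same time. With early_drop=True, once the load reaches 80% of the
    capacity, a single randomly chosen flow halves its window instead.
    Returns (final windows, average link utilization).
    """
    rng = random.Random(seed)
    cwnd = [1] * num_flows
    delivered = 0
    for _ in range(rounds):
        offered = sum(cwnd)
        delivered += min(offered, capacity)
        if offered > capacity:
            # Tail drop: the full queue drops packets from every flow.
            cwnd = [max(1, w // 2) for w in cwnd]
        elif early_drop and offered >= 0.8 * capacity:
            # Early random drop: only one flow backs off, the others keep growing.
            victim = rng.randrange(num_flows)
            cwnd = [max(1, w // 2) if i == victim else w + 1
                    for i, w in enumerate(cwnd)]
        else:
            # No loss: additive increase for every flow.
            cwnd = [w + 1 for w in cwnd]
    return cwnd, delivered / (capacity * rounds)


for mode in (False, True):
    windows, utilization = simulate(4, 40, 200, early_drop=mode)
    print(f"early_drop={mode}: windows={windows}, utilization={utilization:.0%}")
```

With `early_drop=False`, the windows always stay identical because every flow rises and falls in lockstep, which is exactly the global synchronization problem. With `early_drop=True`, the windows typically drift apart, so the flows no longer back off at the same moment.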

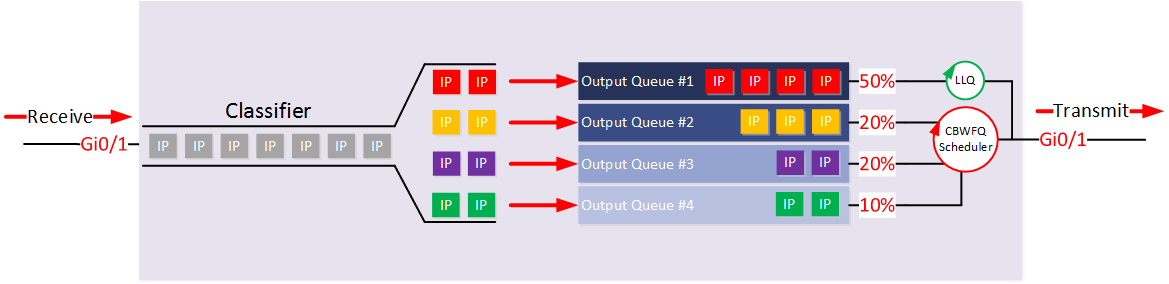

The difference between priority and bandwidth is about the scheduler. Take a look at this picture:

There are four output queues here. Q1 is attached to the “LLQ” scheduler and Q2, Q3 and Q4 are attached to the “CBWFQ” scheduler.

Packets in Q2, Q3, and Q4 are emptied in a weighted round-robin fashion. Round robin means that we move between the queues like this: Q2 > Q3 > Q4 and back to Q2. Weighted means that we can serve more packets from one queue if we want to. For example, when it’s Q2’s turn, we send 2 packets; Q3 also gets to send 2 packets, but Q4 only gets to send 1 packet.
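The weighted round robin described above can be sketched in a few lines of Python. This is a simplified, packet-counting illustration that uses the weights from the example (Q2 = 2, Q3 = 2, Q4 = 1); IOS does not literally schedule CBWFQ this way, and the function name and packet labels are hypothetical.

```python
from collections import deque


def weighted_round_robin(queues, weights):
    """Drain the queues in weighted round-robin order.

    On its turn, a queue may send up to `weight` packets before the scheduler
    moves on to the next queue (Q2 > Q3 > Q4 > back to Q2).
    """
    sent = []
    while any(queues[name] for name in weights):
        for name, weight in weights.items():
            queue = queues[name]
            for _ in range(weight):
                if not queue:
                    break  # this queue is empty, move on to the next one
                sent.append(queue.popleft())
    return sent


queues = {
    "Q2": deque(["Q2-1", "Q2-2", "Q2-3", "Q2-4"]),
    "Q3": deque(["Q3-1", "Q3-2", "Q3-3", "Q3-4"]),
    "Q4": deque(["Q4-1", "Q4-2", "Q4-3", "Q4-4"]),
}
print(weighted_round_robin(queues, {"Q2": 2, "Q3": 2, "Q4": 1}))
```

Each full round sends two packets from Q2, two from Q3, and one from Q4, so Q4 gets roughly 20% of the packets while it has traffic. Once Q2 and Q3 are empty, Q4 is served on every turn.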

Q1 is “special” since it’s attached to the LLQ scheduler. When something ends up in Q1, it is transmitted immediately, even if there are packets in Q2, Q3, and Q4. Traffic in Q1 is always prioritized over these queues.
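Here is a hedged Python sketch of how the LLQ scheduler sits in front of the round-robin queues: on every transmit opportunity (one packet per "tick" in this toy model), Q1 is checked first, and only when it is empty does the weighted round robin over Q2, Q3, and Q4 get a turn. The function name, the tick model, and the packet labels are assumptions for illustration, and the policer that IOS applies to the priority queue during congestion is left out.

```python
from collections import deque


def llq_schedule(arrivals, weights, ticks):
    """Send one packet per tick: Q1 (priority) first, otherwise WRR over the rest.

    arrivals maps a tick number to a list of (queue_name, packet) tuples.
    weights maps the CBWFQ queue names to how many turns each gets per round.
    """
    queues = {"Q1": deque(), **{name: deque() for name in weights}}
    # Expand the weights into a repeating list of turns: Q2, Q2, Q3, Q3, Q4.
    turns = [name for name, weight in weights.items() for _ in range(weight)]
    turn = 0
    sent = []
    for tick in range(ticks):
        for name, packet in arrivals.get(tick, []):
            queues[name].append(packet)
        if queues["Q1"]:
            # LLQ: anything in the priority queue goes out immediately.
            sent.append(queues["Q1"].popleft())
            continue
        # CBWFQ: try each turn at most once, skipping empty queues.
        for _ in range(len(turns)):
            name = turns[turn]
            turn = (turn + 1) % len(turns)
            if queues[name]:
                sent.append(queues[name].popleft())
                break
    return sent


arrivals = {
    0: [("Q2", "a1"), ("Q2", "a2"), ("Q2", "a3"),
        ("Q3", "b1"), ("Q3", "b2"), ("Q4", "c1")],
    2: [("Q1", "voice")],
}
print(llq_schedule(arrivals, {"Q2": 2, "Q3": 2, "Q4": 1}, ticks=8))
```

The voice packet arrives at tick 2, after b1, b2, c1, and a3 were already waiting, yet it is the very next packet sent.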

Q1 is our priority queue, configured with the priority command. Q2, Q3, and Q4 have a bandwidth guarantee because of our scheduler, configured with the bandwidth command.

I hope this helps!

Hello Rene,

Thanks a lot for your explanation. However, I still need some more clarification. Let’s say I have an interface, Gi0/1, that can handle 100 packets per second, and three different kinds of traffic pass through it: Traffic A, B, and C.

And the QoS is configured like this:

Traffic A: Priority 50 packets

Traffic B: Bandwidth 25 packets

Traffic C: Bandwidth 25 packets

I am just using packets per second instead of MB or KB per second.

As far as my understanding goes, QoS will work like this:

Every second, the interface will send out 100 packets in total, but QoS will make sure that, out of those 100 packets, the interface sends 50 packets of Traffic A first, then 25 packets of Traffic B and 25 packets of Traffic C. Is that correct?

If I draw this, the queue will be like this:

B + C + A =========>>Out

25 + 25 + 50

or

C + B + A ===========>Out

25 + 25 + 50

In other words, Traffic A will be delivered first.

Is it correct?

Thank you so much.

Azm

Hello Azm

In your example, we assume that Traffic A is attached to an LLQ scheduler, which is what you mean by priority traffic. We also assume that Traffic B and C are served in round-robin fashion without weighting.

If this is the case, then yes this is correct.
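To visualize why priority behaves like a maximum and bandwidth like a minimum, here is a rate-level Python sketch of Azm's example (100 packets per second, A = priority 50, B and C = bandwidth 25). It is a simplified fluid model, not the actual IOS algorithm, and it assumes that: the priority class is policed to its rate only when the interface is congested; each bandwidth class first gets its guarantee; and any leftover capacity is shared in proportion to the guarantees. The function `allocate` is hypothetical.

```python
def allocate(offered, capacity, priority_class, priority_rate, guarantees):
    """Return how much of each class is sent in one second (simplified model).

    offered: packets per second each class is trying to send.
    guarantees: minimum rate of each bandwidth class.
    """
    if sum(offered.values()) <= capacity:
        # No congestion: QoS does not limit anything, everything is sent.
        return dict(offered)
    # Congestion: the priority class goes first but is policed to its rate.
    sent = {priority_class: min(offered[priority_class], priority_rate)}
    remaining = capacity - sent[priority_class]
    # Each bandwidth class first receives up to its guaranteed minimum.
    for name, guarantee in guarantees.items():
        sent[name] = min(offered[name], guarantee)
        remaining -= sent[name]
    # Leftover capacity is shared among classes that still have traffic,
    # in proportion to their guarantees.
    hungry = {name for name in guarantees if offered[name] > sent[name]}
    while remaining > 1e-9 and hungry:
        total_weight = sum(guarantees[name] for name in hungry)
        pool = remaining
        for name in list(hungry):
            extra = min(pool * guarantees[name] / total_weight,
                        offered[name] - sent[name])
            sent[name] += extra
            remaining -= extra
            if offered[name] - sent[name] <= 1e-9:
                hungry.discard(name)
    return sent


GUARANTEES = {"B": 25, "C": 25}
for offered in ({"A": 80, "B": 40, "C": 40},   # every class wants more than its share
                {"A": 10, "B": 60, "C": 60},   # A is quiet, B and C borrow its share
                {"A": 60, "B": 20, "C": 10}):  # no congestion at all
    print(offered, "->", allocate(offered, 100, "A", 50, GUARANTEES))
```

In the first case, A is held to 50 and B and C get exactly their 25 each: the priority rate acts as a ceiling, the bandwidth rates as a floor. In the second case, B and C borrow the capacity A is not using. In the third case, there is no congestion, so A can exceed its priority rate, which matches the point that priority and bandwidth only come into play during congestion.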

I hope this has been helpful!

Laz

Hello Laz,

That was my question and that is the answer I was looking for. Thank you so much as usual.

Azm

Hi Rene,

That’s a great explanation, but I’m slightly confused by this. I understand that we’re only applying QoS outbound, for example on our WAN interface to the ISP, so that the upload is prioritized. Does that mean we have to apply exactly the same policy outbound on the LAN interface so that QoS also applies to downloads?

For example, I’d like to reserve upload bandwidth and use a priority queue on the WAN interface, and also reserve download bandwidth for my calls as they enter the LAN.

Hello Laura

If you want to prioritise the download traffic as well, you are correct that you will have to implement it on the appropriate outbound interface. However, the choice of the location of the implementation is important. It would have to be placed on an interface where you know you will have congestion. The outbound interface of the LAN segment would probably not be a good choice because in most cases, the LAN connection is not the bottleneck. In the case of the topology in this lesson, the best place to implement it would be on the Gi0/1 interface of R3, which would be considered the location of the WAN connection into the network.

I hope this has been helpful!

Laz

Hello Laz,

I have a question and I am going to refer to the below configuration for my question.

```
class-map match-any VOICE
 match dscp ef
 match dscp cs5
 match dscp cs4

policy-map NESTED-POLICY
 class VOICE
  priority percent 80
 class class-default
  bandwidth percent 20
  fair-queue
  random-detect dscp-based

policy-map INTERNET-OUT
 class class-default
  shape average 10000000
  service-policy NESTED-POLICY

interface Gig 1/0/1
 service-policy output INTERNET-OUT
```

In this configuration, I have two different classes: voice and other traffic. 80% of the bandwidth is allocated to voice traffic and 20% to other traffic.

That means that during congestion, voice traffic can use 8 Mbps and other traffic can use 2 Mbps, since I am also shaping to 10 Mbps.
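The 8 Mbps / 2 Mbps numbers can be checked with a bit of arithmetic. In this nested policy, the child percentages are worked out from the parent shaper's rate, which is what the show output later in this thread reports ("Priority: 80% (8000 kbps)" and "bandwidth 20% (2000 kbps)"). The 200 ms default burst used below is taken from Cisco's description of the priority command and reproduces the "burst bytes 200000" in that output. The function names are hypothetical.

```python
SHAPE_RATE_BPS = 10_000_000  # shape average 10000000 in INTERNET-OUT
CHILD_PERCENTS = {"VOICE": 80, "class-default": 20}  # from NESTED-POLICY


def child_rates(parent_rate_bps, percents):
    """Child-policy percentages are taken from the parent shaper's rate."""
    return {name: parent_rate_bps * pct // 100 for name, pct in percents.items()}


def default_priority_burst_bytes(rate_bps, burst_ms=200):
    """Default LLQ burst: burst_ms worth of traffic at the priority rate, in bytes."""
    return rate_bps * burst_ms // 1000 // 8


rates = child_rates(SHAPE_RATE_BPS, CHILD_PERCENTS)
print(rates)
print(default_priority_burst_bytes(rates["VOICE"]))
```

Keep in mind that these are the rates each class is guaranteed during congestion, not a cap for the bandwidth class. Rene's iperf test in this thread shows class-default reaching close to the full 10 Mbps shaped rate while VOICE is idle.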

Scenario:

Now, let’s say no voice traffic traverses this device, even though 80% (8 Mbps) of the bandwidth is reserved for voice. Meanwhile, 5 Mbps of other traffic is constantly trying to leave the device, although only 20% (2 Mbps) of the bandwidth is reserved for it.

Question:

What is going to happen in this scenario?

As far as I understand, QoS only comes into play during congestion. Even during congestion, if one class of traffic does not use its allocated bandwidth, other classes can use the unused bandwidth. Therefore, the 5 Mbps of other traffic should be able to go through the interface, since there is no voice traffic at all and the voice bandwidth is completely unused.

But I have run into a situation where other traffic is not allowed to use more than 20 % (2M) of bandwidth even though there is no voice traffic at all. It looks like the router is reserving the 80 % bandwidth and not allowing other traffic to use it even though the voice bandwidth is unused. Would you please explain this to me?

I would also like to thank you in advance for your help, as usual.

Azm

Hi Azm,

On Cisco IOS routers, your priority and bandwidth commands only come into play when there is congestion. Your shaper, however, is set to 10 Mbps, and that is a hard limit.

When there is no voice traffic, other traffic should be able to get up to 10 Mbps. I loaded your config on a router and ran iperf to demonstrate this:

```
$ iperf -c 192.168.2.2
------------------------------------------------------------
Client connecting to 192.168.2.2, TCP port 5001
TCP window size: 85.0 KByte (default)
------------------------------------------------------------
[ 3] local 192.168.1.1 port 56526 connected with 192.168.2.2 port 5001
[ ID] Interval Transfer Bandwidth
[ 3] 0.0-10.3 sec 12.0 MBytes 9.76 Mbits/sec
```

You can see this traffic is getting shaped at ~9.76 Mbps.
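The iperf result and the shaper values in the show output that follows (cir 10000000, bc 40000) can be related with a little arithmetic. This sketch assumes that bc is expressed in bits, as for the shape average command, and that iperf2 counts a MByte as 2^20 bytes and a Mbit as 10^6 bits. The 12.0 MBytes figure is rounded, so the result only approximately matches the reported 9.76 Mbits/sec.

```python
CIR_BPS = 10_000_000  # "shape (average) cir 10000000"
BC_BITS = 40_000      # "bc 40000"

# A shaper releases at most Bc bits every Tc seconds, so Tc = Bc / CIR.
tc_seconds = BC_BITS / CIR_BPS
print(f"Tc = {tc_seconds * 1000:.0f} ms, {BC_BITS // 8} bytes per interval")

# iperf2 transfer: 12.0 MBytes (2**20 bytes each) in 10.3 seconds.
transfer_bytes = 12.0 * 2**20
interval_s = 10.3
throughput_mbps = transfer_bytes * 8 / interval_s / 1e6
print(f"{throughput_mbps:.2f} Mbit/s")
```

So the shaper hands out 5000 bytes every 4 ms, and the measured throughput sits just under the 10 Mbps CIR.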

```
R1#show policy-map interface fa0/1
 FastEthernet0/1

  Service-policy output: INTERNET-OUT

    Class-map: class-default (match-any)
      9145 packets, 13280475 bytes
      5 minute offered rate 81000 bps, drop rate 0 bps
      Match: any
      Queueing
      queue limit 64 packets
      (queue depth/total drops/no-buffer drops) 0/56/0
      (pkts output/bytes output) 9089/13195691
      shape (average) cir 10000000, bc 40000, be 40000
      target shape rate 10000000

      Service-policy : NESTED-POLICY

        queue stats for all priority classes:
          queue limit 64 packets
          (queue depth/total drops/no-buffer drops) 0/0/0
          (pkts output/bytes output) 0/0

        Class-map: VOICE (match-any)
          0 packets, 0 bytes
          5 minute offered rate 0 bps, drop rate 0 bps
          Match: dscp ef (46)
            0 packets, 0 bytes
            5 minute rate 0 bps
          Match: dscp cs5 (40)
            0 packets, 0 bytes
            5 minute rate 0 bps
          Match: dscp cs4 (32)
            0 packets, 0 bytes
            5 minute rate 0 bps
          Priority: 80% (8000 kbps), burst bytes 200000, b/w exceed drops: 0

        Class-map: class-default (match-any)
          9145 packets, 13280475 bytes
          5 minute offered rate 81000 bps, drop rate 0 bps
          Match: any
          Queueing
          queue limit 64 packets
          (queue depth/total drops/no-buffer drops/flowdrops) 0/56/0/0
          (pkts output/bytes output) 9089/13195691
          bandwidth 20% (2000 kbps)
          Fair-queue: per-flow queue limit 16
            Exp-weight-constant: 9 (1/512)
            Mean queue depth: 9 packets
            dscp     Transmitted     Random drop    Tail/Flow drop  Minimum  Maximum  Mark
                     pkts/bytes      pkts/bytes     pkts/bytes      thresh   thresh   prob
            default  9089/13195691   56/84784       0/0             20       40       1/10
```
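The WRED section at the bottom of that output can be read with two formulas. As a sketch of Cisco's documented WRED behavior: the average queue depth is an exponentially weighted moving average with weight 2^-n (n = 9 here, hence 1/512), and the drop probability rises linearly from 0 at the minimum threshold (20) to 1/10, the mark probability, at the maximum threshold (40); above the maximum threshold, every packet is dropped. The function names are hypothetical. A mean queue depth of 9 packets is below the minimum threshold, so no random drops were happening when the output was captured; the 56 random drops must have occurred while the average was between the thresholds.

```python
def wred_average(old_avg, current_depth, exp_weight=9):
    """EWMA of the queue depth: new = old * (1 - 2**-n) + current * 2**-n."""
    return old_avg + (current_depth - old_avg) / 2 ** exp_weight


def wred_drop_probability(avg_depth, min_th=20, max_th=40, mark_prob_denominator=10):
    """Drop probability for one packet, given the average queue depth."""
    if avg_depth < min_th:
        return 0.0  # below the minimum threshold: never drop early
    if avg_depth > max_th:
        return 1.0  # above the maximum threshold: drop everything
    # In between: linear ramp from 0 up to 1/mark_prob_denominator.
    return (avg_depth - min_th) / (max_th - min_th) / mark_prob_denominator


# A single burst barely moves the average: one sample of 64 packets, starting
# from an average of 9, only raises it to about 9.1.
print(wred_average(9, 64))
for depth in (9, 30, 41):
    print(depth, wred_drop_probability(depth))
```

The slow-moving average is deliberate: short bursts are absorbed by the queue, and only sustained queue build-up triggers early drops.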

Hello Rene,

Thank you so much for your help. Yes, this should be the expected result, but I am not sure why my router is acting funny. I may have to get in touch with Cisco TAC. Thanks again, though.

Azm

Hi NL Team,

In the section where PQ is configured and you ping with the ToS byte set:

R1#ping 192.168.23.3 tos 184 repeat 100

When looking at the output of the show policy-map interface command, we see matches. However, I was under the impression that traffic would not be sent to any software queues unless the hardware queue was congested first. Are the matches shown in the output just saying "I see traffic, but I'm not actually sending it to the priority queue yet"? Will the router use FIFO until there is congestion?

Another one, guys/gals:

If we use PQ, I assume that any percentage attached to the queue for WRR is ignored. In the diagram at the top of the tutorial, the PQ has 50% beside it. Is this ignored when the queue becomes a PQ?

Thanks

Hello Robert,

On IOS routers, queuing always happens in a software queue; the hardware queue is FIFO. Even when the interface is not congested, you’ll see these hits on the policy-maps. When there is no congestion, your priority queue can exceed the rate you configured; the rate is only enforced when there is congestion.

To be honest, I’m not 100% sure whether the software queues are active or inactive when the hardware interface is not congested. Some Cisco documents say something like “the software queue is inactive” when there is no congestion on the hardware interface, which would mean that the hits on the policy-map are only cosmetic.

Here’s an example from one of those documents:

> During periods with light traffic, that is, when no congestion exists, packets are sent out the interface as soon as they arrive. During periods of transmit congestion at the outgoing interface, packets arrive faster than the interface can send them. If you use congestion management features, packets accumulating at an interface are queued until the interface is free to send them; they are then scheduled for transmission according to their assigned priority and the queueing mechanism configured for the interface.

On the 2960/3560/3750 switches, it’s a different story, as you configure the hardware when you make changes to your queue(s).

Your priority queue still has a percentage; otherwise, your other queues would starve if there were too much traffic in your priority queue.

The priority and bandwidth percentages that you set can add up to 75% in total. The remaining 25% is for “unclassified” traffic and routing traffic. If you have a 50% priority queue, you can still have three queues with, let’s say, 10%, 5%, and 5% of bandwidth.
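The 75% rule is easy to check by adding up the percentages. This sketch assumes a fixed 75% limit as described above; the function name is hypothetical. The limit can be changed with the max-reserved-bandwidth interface command, and it is not enforced in every setup: the nested policy earlier in this thread (priority 80% plus bandwidth 20%, 100% in total) was accepted on Rene's test router.

```python
def reservation_check(percents, limit=75):
    """Sum the priority and bandwidth percentages and compare them with the limit."""
    total = sum(percents.values())
    return total, total <= limit


# Rene's example: a 50% priority queue plus three queues of 10%, 5%, and 5%.
print(reservation_check({"priority": 50, "Q2": 10, "Q3": 5, "Q4": 5}))
```

With 70% reserved, there is still 5% of headroom under the assumed 75% limit.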

Hope this helps!

Rene

Hi,

For the priority percent command in the priority queue configuration, is the percentage based on the bandwidth set with the bandwidth interface command, or on the speed the interface is currently running at?

Thanks

Good lesson, but it would be good to see further examples and a general overview of queuing mechanisms:

WFQ, CBWFQ, PQ, flow-based WFQ, etc.

Not to mention congestion avoidance mechanisms such as RED, WRED, and CBWRED.

Hello Robert

Cisco documentation is not 100% clear; however, it seems to indicate that the percentage is a percentage of the physical interface itself. Based on this documentation:

https://www.cisco.com/c/en/us/td/docs/ios/12_0s/feature/guide/12sllqpc.html#wp1026343

It says that the total bandwidth is the bandwidth of the physical interface.

The following documentation also states that the bandwidth percent is a percentage of the underlying link rate.

Once again, not completely clear. There is some evidence that the bandwidth configuration of an interface is completely ignored in some cases depending on the type of interface in question. I believe that only an experiment with changing the bandwidth parameter of an interface will be able to answer that question completely.

I’ll let Rene add anything he sees fit to this question as well, from his experience.

I hope this has been helpful!

Laz

Hello Chris

Thanks so much for your suggestions! It’s always good to hear what people are most interested in, in order to provide the most useful content. I suggest you go to the Lesson Ideas area of the forum and submit your suggestions there. There are other members that I’m sure would want the same topics to be covered, so the more voices that are heard, the more likely they will be covered.

Once again, thanks for your invaluable input!!

I hope this has been helpful!

Laz

Hello Rene,

How can I simulate a ping with a ToS value in Packet Tracer?

I’ve tried it, but it didn’t work! The DSCP value doesn’t change.

Hello Mohamed

Packet tracer is capable of configuring QoS mechanisms where DSCP values are changed. Can you attempt to create such a lab in packet tracer and show us your results? Then we can help troubleshoot the specific problem you are facing.

Laz