Hello Michael

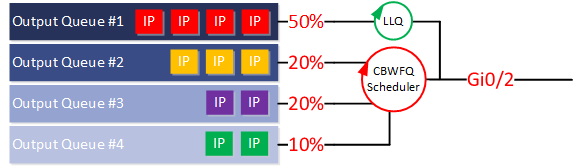

Let’s use this diagram from the lesson to help us out:

Notice that the interface is a GigabitEthernet (1 Gbps) interface, and that the priority queue is limited to 50% of the total bandwidth. Now remember, if there’s no congestion, the above queues are effectively empty, and all traffic is sent immediately.

Now let’s assume there is congestion over an extended period of time, and a lot of priority traffic is arriving at the interface. That priority traffic goes into the priority queue to be served immediately. However, the priority queue has been limited to 50% of the bandwidth, which means that only up to 500 Mbps of traffic can use it. This is where the “implicit policer” I mentioned before comes in: any priority traffic above that 500 Mbps is dropped.

This means that the remaining 500 Mbps of bandwidth is guaranteed for use by CBWFQ for the non-priority traffic.
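The bandwidth arithmetic and the implicit policer can be sketched in Python using a single-rate token bucket, a common way to model a policer. This is a minimal illustration, not how IOS implements the policer internally: it assumes a 1 Gbps link, 1500-byte packets, and a hypothetical 10-packet burst size, and the names `TokenBucketPolicer` and `police` are made up for this example.

```python
from dataclasses import dataclass

LINK_RATE_BPS = 1_000_000_000                     # GigabitEthernet: 1 Gbps
PQ_PERCENT = 50                                   # priority queue limited to 50%
PQ_RATE_BPS = LINK_RATE_BPS * PQ_PERCENT // 100   # 500 Mbps for the priority queue
CBWFQ_RATE_BPS = LINK_RATE_BPS - PQ_RATE_BPS      # remaining 500 Mbps for CBWFQ


@dataclass
class TokenBucketPolicer:
    """Minimal single-rate token bucket: conform -> admit, exceed -> drop."""
    rate_bps: int        # policed rate in bits per second
    burst_bits: int      # bucket depth (hypothetical value for illustration)
    tokens: float = 0.0
    last_time: float = 0.0

    def __post_init__(self) -> None:
        self.tokens = float(self.burst_bits)  # start with a full bucket

    def allow(self, now: float, packet_bits: int) -> bool:
        # Refill tokens for the elapsed time, capped at the bucket depth.
        elapsed = now - self.last_time
        self.tokens = min(self.burst_bits, self.tokens + elapsed * self.rate_bps)
        self.last_time = now
        if packet_bits <= self.tokens:
            self.tokens -= packet_bits
            return True   # conforming: admitted to the priority queue
        return False      # exceeding: dropped by the policer


def police(offered_bps: int, duration_s: float = 1.0, packet_bytes: int = 1500):
    """Offer a constant-rate priority stream; return (sent_bits, dropped_bits)."""
    packet_bits = packet_bytes * 8
    policer = TokenBucketPolicer(rate_bps=PQ_RATE_BPS, burst_bits=packet_bits * 10)
    interval = packet_bits / offered_bps          # time between packet arrivals
    sent = dropped = 0
    for i in range(int(duration_s / interval)):
        if policer.allow(i * interval, packet_bits):
            sent += packet_bits
        else:
            dropped += packet_bits
    return sent, dropped
```

For example, `police(800_000_000)` offers 800 Mbps of priority traffic for one second: roughly 500 Mbps is let through and roughly 300 Mbps is dropped. `police(300_000_000)` stays under the limit, so nothing is dropped.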

Now, this can be a little difficult to get our heads around because of the phrase “everything that ends up in queue 1 will be served before any of the other queues.” Taken on its own, that suggests that if there’s always something in queue 1, queues 2 to 4 will never be served!
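That worry can be demonstrated with a toy Python model of a pure strict-priority scheduler that has no policer at all. It assumes one packet is sent per time slot and that a new priority packet arrives in every slot; the function name, slot count, and queue contents are arbitrary illustrative choices.

```python
from collections import deque


def strict_priority(slots: int, queues: list[deque]) -> list[int]:
    """Each slot, send one packet from the lowest-numbered non-empty queue."""
    served = [0] * len(queues)
    for _ in range(slots):
        queues[0].append("priority pkt")  # priority traffic keeps arriving
        for idx, q in enumerate(queues):
            if q:
                q.popleft()               # transmit one packet from this queue
                served[idx] += 1
                break                     # only one packet per slot
    return served
```

Calling `strict_priority(1000, [deque()] + [deque(["pkt"] * 100) for _ in range(3)])` returns `[1000, 0, 0, 0]`: queue 1 is never empty, so queues 2 to 4 are starved even though they have packets waiting.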

We often think about dividing the bandwidth into sections, where queue 1 gets 500 Mbps while the other queues combined get the other 500 Mbps. But packets must be transmitted out of the interface serially, meaning one at a time, one after the other. So to achieve those bandwidths, the scheduler shares the use of the interface over time, something similar to time division multiplexing. In this scenario, for each second of time, 50% of the transmission time is devoted to serving queue 1, and 50% is devoted to serving the other three queues, divided according to their percentages. The priority queue’s share is enforced by the implicit policer, and that is what ensures the other queues are not starved of bandwidth.
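The time-sharing idea can be sketched as a small Python simulation that reconciles it with the “served before any of the other queues” rule: priority packets beyond 50% are dropped on arrival by a token-bucket policer, so even though queue 1 is always served first, it can only occupy about half of the transmission slots. The slot count, burst size, the 50/30/20 split for queues 2 to 4, and the earliest-virtual-finish-time selection are hypothetical simplifications for illustration, not the actual IOS CBWFQ algorithm.

```python
from collections import deque
from fractions import Fraction

SLOTS_PER_SECOND = 1000        # hypothetical: one packet-time per slot
PQ_SHARE = Fraction(1, 2)      # priority queue policed to 50% of the link
PQ_BURST = 1                   # hypothetical bucket depth, in packets
CBWFQ_WEIGHTS = [50, 30, 20]   # hypothetical percentages for queues 2-4


def simulate(slots: int = SLOTS_PER_SECOND) -> dict:
    tokens = Fraction(0)
    pq = deque()
    pq_served = pq_dropped = 0
    other_served = [0] * len(CBWFQ_WEIGHTS)

    for slot in range(slots):
        # A priority packet arrives every slot (sustained heavy priority load).
        tokens = min(Fraction(PQ_BURST), tokens + PQ_SHARE)
        if tokens >= 1:
            tokens -= 1
            pq.append(slot)    # conforming: admitted to the priority queue
        else:
            pq_dropped += 1    # exceeding: dropped by the implicit policer

        if pq:
            # Strict priority: anything admitted to queue 1 is sent first.
            pq.popleft()
            pq_served += 1
        else:
            # Queues 2-4 are always backlogged here; serve the one whose next
            # packet has the earliest virtual finish time (served + 1) / weight.
            idx = min(range(len(CBWFQ_WEIGHTS)),
                      key=lambda i: Fraction(other_served[i] + 1, CBWFQ_WEIGHTS[i]))
            other_served[idx] += 1

    return {"pq_served": pq_served, "pq_dropped": pq_dropped,
            "other_served": other_served}
```

Running `simulate()` returns `{'pq_served': 500, 'pq_dropped': 500, 'other_served': [250, 150, 100]}`: half of the slots go to queue 1, and the other half are shared among queues 2 to 4 in proportion to their weights.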

I hope this has been helpful!

Laz