Hello,

I’d like to ask about how to calculate jitter.

I know that jitter is the variation in one-way delay, and I also know that networking devices can be configured to report it. What I’m interested in is the logic behind calculating jitter manually.

One way to calculate jitter manually is to issue a few pings from the Command Prompt (if you’re using Windows) and then average the time differences between consecutive replies:

Can someone please give a mathematical explanation of why this works?

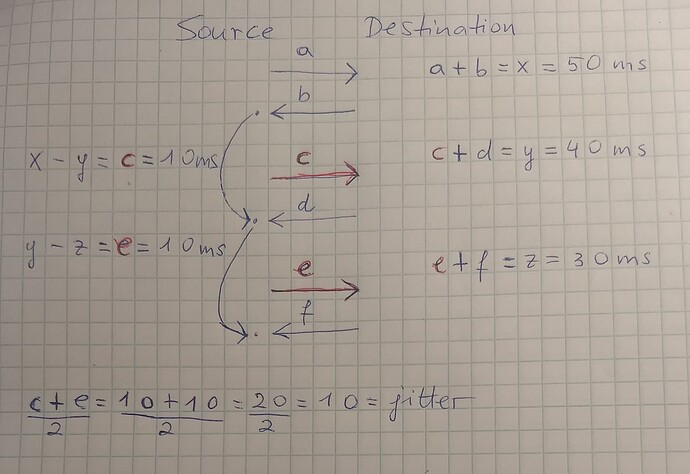

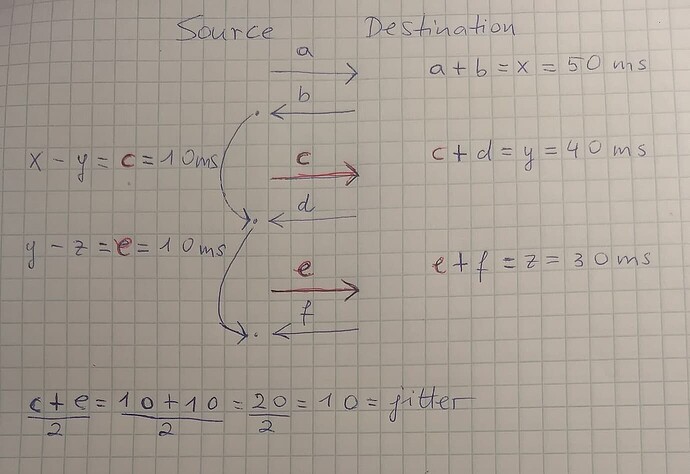
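To make the procedure concrete, here is a minimal Python sketch of how I understand it. The function name `mean_consecutive_difference` and the sample values are made up for illustration, and I’m assuming each difference is taken as an absolute value so that increases and decreases don’t cancel each other out.

```python
def mean_consecutive_difference(rtts_ms):
    """Average absolute difference between consecutive round-trip times (ms)."""
    if len(rtts_ms) < 2:
        raise ValueError("need at least two round-trip times")
    # Pair each RTT with the one that follows it and take the absolute gap.
    diffs = [abs(later - earlier) for earlier, later in zip(rtts_ms, rtts_ms[1:])]
    return sum(diffs) / len(diffs)


# Hypothetical RTTs in ms, made up for illustration (not measured).
sample_rtts = [30, 34, 31, 36]
print(mean_consecutive_difference(sample_rtts))  # (4 + 3 + 5) / 3 = 4.0
```

Note that N pings give only N - 1 differences, so the sum is divided by N - 1 rather than by N.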

I also gave it a try and this is what I’ve come up with:

Here, I’m issuing 3 pings. The letters “x”, “y”, and “z” stand for the round-trip times (i.e. the two-way delays), and the letters “a” to “f” stand for the individual one-way trips (i.e. the one-way delays). As you can see, the time it takes for the first packet (“a”) to be received by the “Destination”, plus the time it takes for the second packet (“b”) to be received by the “Source”, is equal to “x”, so the round-trip time (i.e. the two-way delay) is:

a + b = x = 50 ms

The difference between “x” and “y” is “c”, and “c” is the time it takes for a packet to arrive at the “Destination” (so not the round-trip time, i.e. not the two-way delay, but the one-way delay). In other words, the difference between the first and second round-trip times is equal to the time that elapsed between the two, which is “c”.

So we calculate every second packet’s one-way delay (i.e. “c”, “e”, etc.) by taking the difference between the sums of two consecutive pairs of packets (i.e. between two consecutive round-trip times). By “every second packet”, I mean starting from the third packet: “c” is the third packet, the second packet after “c” is “e”, the second packet after “e” would be “g” (not shown in the picture), and so on.

So that’s how we can tease out the one-way delay of packets “c”, “e”, etc.

Then we take their average: we add them all up and divide the sum by the number of values we added. In this example we’ve added 2 values (“c” and “e”), so we divide by 2. That gives us an average one-way delay of 10 ms, which is the jitter.
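Written out as a quick Python sketch, the arithmetic of my example looks like this. Only x = 50 ms is stated explicitly above; y = 40 ms and z = 30 ms are assumed here as the values that make both differences come out to 10 ms.

```python
# Round-trip times in ms. x = 50 comes from the example above; y = 40 and
# z = 30 are assumed values that make both differences equal to 10 ms.
x, y, z = 50, 40, 30

c = abs(x - y)  # difference between the 1st and 2nd round-trip times
e = abs(y - z)  # difference between the 2nd and 3rd round-trip times

jitter = (c + e) / 2  # two differences, so divide by 2
print(c, e, jitter)  # 10 10 10.0
```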

Please note that I’ve just made up some easy numbers for the example. For instance, “z” could have been larger than “y” (say “z” is 40 ms and “y” is 30 ms), but that wouldn’t have changed the size of their difference, which would still be 10 ms.

Is this the solution?

Also, let’s say I issued this ping:

```
C:\Users\test>ping 1.1.1.1

Pinging 1.1.1.1 with 32 bytes of data:
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58

Ping statistics for 1.1.1.1:
    Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),
Approximate round trip times in milli-seconds:
    Minimum = 29ms, Maximum = 29ms, Average = 29ms
```

If the maximum RTT is 29 ms, doesn’t that mean that the jitter must be smaller than 29 ms? One RTT is the sum of two consecutive one-way delays, so if their sum is 29 ms, then each of them individually must be less than 29 ms.
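For reference, here is a rough Python sketch that applies the consecutive-difference averaging to the reply lines of this ping. The regular expression is my own assumption about the format and only matches fields like `time=29ms`; it would skip the `time<1ms` form that Windows prints for sub-millisecond replies.

```python
import re

# Reply lines copied from the ping output above.
ping_output = """\
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58
Reply from 1.1.1.1: bytes=32 time=29ms TTL=58
"""

# Pull the number out of each "time=29ms" field.
rtts = [int(ms) for ms in re.findall(r"time=(\d+)ms", ping_output)]

# Absolute difference between each reply and the one before it.
diffs = [abs(later - earlier) for earlier, later in zip(rtts, rtts[1:])]

jitter = sum(diffs) / len(diffs)
print(rtts, jitter)  # [29, 29, 29, 29] 0.0
```

Since all four replies took 29 ms, every consecutive difference is zero, so this method would report 0 ms for this particular run.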

Thanks.

Attila