This topic is to discuss the following lesson:

Hello,

Thanks for another awesome lesson!

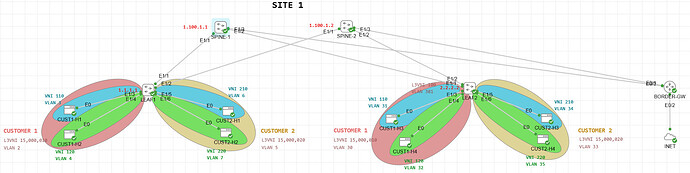

I have a doubt. I wanted to know how the following situation is handled: let’s say I have a company that provides IaaS to customers worldwide. I own a DC and I decide to use VXLAN because of all its benefits. Customers’ VMs run on servers connected to switches that are trunked to the leaf switches (or the leaf is the vSwitch itself, if it supports VXLAN).

So far I have multi-tenancy working well. Isolation between customers works, and L2 and L3 between VNIs of the same customer work too. I am using VXLAN with eBGP Multi-AS on the underlay.

Now I want to offer customers the possibility to connect their VMs to the public Internet. Some customers might want this for their own reasons. Therefore, I need to advertise a default route from the spine switches to the leaf switches, and I need that default route to be imported only into the desired customer VRFs on the leaf.

So I thought one possibility is to configure all VRFs on the BORDER-GW router and on the spine switches, and then advertise the default route over each BGP VRF address family, expecting that the spines readvertise it to the leaf switches with the Extended Community RT intact, so that it is imported into the routing table of the desired customer VRF. Any thoughts on this? Is this recommended? I don’t like having to configure a BGP VRF address family on the spines for every customer VRF.

I wanted to see if Laz or Rene could provide some guidance based on their experience.

Are there any other solutions? Do you know a solution that is actually used or “common” for this scenario?

Thanks,

Jose

Hello Jose

The problem you’re describing is commonly solved in modern VXLAN EVPN fabrics using the overlay control plane. The key insight is that tenant routing (including default routes) typically travels in the EVPN overlay as Type-5 routes tagged with Route Targets, while the underlay (eBGP Multi-AS between spines and leafs) only carries VTEP loopbacks and infrastructure reachability. Spines don’t need to understand tenant VRFs; they just reflect or transit EVPN routes with the extended communities intact. In most designs, the default route is originated or learned on the border gateway and then advertised into EVPN per tenant VRF.
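To make the Route Target mechanics concrete, here is a minimal Python sketch of the idea, not a device configuration. It models a Type-5 default route that passes through a spine untouched and is imported on a leaf only by VRFs whose import RT matches. The VRF names, RT values, and function names are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Type5Route:
    prefix: str
    route_targets: frozenset  # extended communities carried with the route


def spine_reflect(route):
    # The spine holds no tenant VRF: it passes the route along unchanged,
    # so the Route Targets arrive at the leaf intact.
    return route


def leaf_import(route, vrf_import_rts):
    """Return the names of the leaf VRFs that import this route."""
    return sorted(
        vrf
        for vrf, import_rts in vrf_import_rts.items()
        if route.route_targets & import_rts
    )


# Hypothetical values: the border advertises a default route for CUST-A only.
default_route = Type5Route("0.0.0.0/0", frozenset({"65000:50001"}))
leaf_vrfs = {
    "CUST-A": frozenset({"65000:50001"}),
    "CUST-B": frozenset({"65000:50002"}),
}

print(leaf_import(spine_reflect(default_route), leaf_vrfs))  # ['CUST-A']
```

The point of the sketch is that the selection happens on the leaf through the import RT, which is why the spine never needs a per-tenant VRF or address family.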

There are some common variations that can be deployed:

Variation A: Per-Tenant VRF on Border (Most Common for IaaS)

- Border Gateway has VRF for each tenant needing Internet

- Clean isolation, scales well with automation

- Each VRF peers separately with firewall/upstream (or uses subinterfaces)

Variation B: External/Internet VRF + Route Leaking

- Single “Internet VRF” on Border Gateway facing firewall/ISP

- Use inter-VRF route leaking with RT manipulation to provide the default route only to selected tenant VRFs

- Reduces VRF sprawl at the edge but adds complexity in route policies

Variation C: Shared Services VRF

- Centralized services VRF with strict import/export controls

- Good when Internet access goes through centralized security services
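As a rough illustration of the RT manipulation in Variation B, the sketch below computes which Route Targets the border would attach to the default route when exporting it from the single Internet VRF, so that only Internet-enabled tenants import it. This is a Python model under assumed names and RT values, not an actual route policy.

```python
def rts_for_leaked_default(tenant_import_rts, internet_enabled):
    """Return the RTs to attach when exporting 0.0.0.0/0 from the Internet VRF.

    tenant_import_rts: hypothetical map of tenant VRF name -> import RT
    internet_enabled:  set of tenant VRF names that bought Internet access
    """
    unknown = set(internet_enabled) - set(tenant_import_rts)
    if unknown:
        raise KeyError(f"no import RT defined for: {sorted(unknown)}")
    return sorted(tenant_import_rts[tenant] for tenant in internet_enabled)


# Hypothetical tenants; CUST-B has no Internet service.
tenant_import_rts = {
    "CUST-A": "65000:50001",
    "CUST-B": "65000:50002",
    "CUST-C": "65000:50003",
}
internet_enabled = {"CUST-A", "CUST-C"}

print(rts_for_leaked_default(tenant_import_rts, internet_enabled))
# ['65000:50001', '65000:50003']
```

The trade-off mentioned in the list shows up here: there is only one edge-facing VRF, but the list of attached RTs becomes a policy that has to be kept in sync with the customer database.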

Nice instincts on the network design!

I hope this has been helpful!

Laz

Thank you Laz. I ended up using VRF Lite and iBGP between my Edge router and the Spine switches (so your Per-Tenant VRF solution). I actually ended up putting the Edge router on SD-WAN and using the Service VPNs to peer with the different customer VRFs of the L3 VNIs that I had to include on the Spine switches for this to work. This excellent document provides the guidance for it:

I have a question. The problem I found with this design is ROAS: with VRF Lite (Per-Tenant VRF) on the spine switches, I had to configure ROAS on both the spine and the Edge router, dedicating a subinterface per VRF. That is also what Cisco says to do in the document I attached. Now, since I have 2 spines, I ended up creating a multi-access area with the WAN Edge routers! The same thing I avoided with these complex VXLAN topologies on the access layer, I now have on the core layer! Is this how it is supposed to be done? Or should I maybe use tunnels to avoid ROAS?

Also, this implies another limitation, which is scalability. Across the leaf switches I can scale to more than 5000 customers in total (that is, I might have 200 customers on one leaf, 2000 different customers on another, then some mixed, …), but the challenge comes when the customer VRFs have to be configured on the spine switches with the L3 VNI. Since I only have 4095 VLANs (a little fewer once the reserved ones are excluded), I can only support that number of customers per spine, considering that all my customers (more than 4095) need an L3 VNI (or even several). So at that point, would I just have to distribute the L3 VNIs across the spines? Is that how it is done in a real-world scenario? Perhaps you or Rene have experienced this and can provide some insight.

Thanks,

Jose

Hello Jose

Although this is indeed one of the recommended approaches, it does have its limitations. Using VRF-Lite with subinterfaces (ROAS) on spine switches recreates the multi-access complexity you avoided at the access layer! This means that it may be best practice for certain scenarios, but you have identified the limitations and difficulties that can arise.

In typical spine-leaf VXLAN designs, spines should be tenant-agnostic (pure IP underlay with EVPN route reflector function). Border leaves (a dedicated pair, often vPC’d) are the recommended place to terminate tenant VRFs and provide external connectivity. By placing tenant VRFs on spines, you’re violating this separation of concerns, which can hurt scale and operational clarity.

However, in smaller deployments or specific design constraints like yours, spine-based external connectivity with proper design (vPC, active/standby routing) can work.

Tunnels are probably not a good solution to this. GRE and/or IPsec tunnels add header overhead that reduces the effective MTU, which creates a fragmentation risk, and they also add operational complexity.
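To put a number on the MTU concern, here is a small Python calculation for a plain GRE tunnel over IPv4. The 20-byte IPv4 header and 4-byte base GRE header are standard sizes; IPsec overhead depends on the mode and ciphers, so it is left as a parameter you would have to supply for your own setup. The function names and the 1500-byte link MTU are only illustrative.

```python
IPV4_HEADER = 20  # outer IPv4 header without options
GRE_HEADER = 4    # base GRE header, no key or sequence number


def tunnel_payload_mtu(link_mtu, extra_overhead=0):
    """Largest tenant IP packet that fits in the tunnel without fragmentation.

    extra_overhead is a placeholder for anything added on top of GRE
    (for example IPsec), whose size depends on the chosen mode and ciphers.
    """
    return link_mtu - IPV4_HEADER - GRE_HEADER - extra_overhead


def needs_fragmentation(packet_size, link_mtu, extra_overhead=0):
    return packet_size > tunnel_payload_mtu(link_mtu, extra_overhead)


print(tunnel_payload_mtu(1500))         # 1476
print(needs_fragmentation(1500, 1500))  # True
print(needs_fragmentation(1476, 1500))  # False
```

So a tenant sending full 1500-byte packets would hit fragmentation (or drops, if DF is set) on a 1500-byte link unless the link MTU is raised or the tenant MTU is lowered.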

The VLAN limit you mention is indeed a hard limit that comes from the 12-bit VLAN ID in 802.1Q. In production multi-tenant networks with thousands of VRFs, you must distribute tenant VRFs across multiple border device pairs. This is sometimes described as VRF sharding. For example:

- Border Set A (Spines 1 & 2 or Border Leafs 1 & 2): Handles Customers 1–2000

- Border Set B (Spines 3 & 4 or Border Leafs 3 & 4): Handles Customers 2001–4000

- Border Set C: Handles Customers 4001–6000
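The split above can be expressed as a simple lookup. This Python sketch assumes sequential customer IDs, 2000 customers per border set as in the example, and one VLAN consumed per tenant L3 VNI on a device; the 4094 figure is the number of usable 802.1Q VLAN IDs (1 to 4094), and reserved VLANs on a given platform would lower it further. The function name is hypothetical.

```python
USABLE_VLANS = 4094  # 12-bit VLAN ID minus the reserved values 0 and 4095


def border_set_for(customer_id, customers_per_set=2000):
    """Map a customer ID to a border set letter (A, B, C, ...)."""
    if customer_id < 1:
        raise ValueError("customer IDs start at 1")
    # Assumption: each tenant L3 VNI consumes one VLAN on the border device.
    if customers_per_set > USABLE_VLANS:
        raise ValueError("a border set cannot hold more tenants than VLANs")
    index = (customer_id - 1) // customers_per_set
    if index >= 26:
        raise ValueError("this sketch only names sets A to Z")
    return chr(ord("A") + index)


for cid in (1, 2000, 2001, 4000, 4001, 6000):
    print(cid, border_set_for(cid))
# 1 A
# 2000 A
# 2001 B
# 4000 B
# 4001 C
# 6000 C
```

In practice the assignment would more likely come from an automation or IPAM system than from ID ranges, but the constraint is the same: no single border pair carries more tenant VRFs than it has VLANs available.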

Your WAN edge/SD-WAN router must connect to multiple border sets (with separate physical ports or port-channels).

Ideally, we come back to the best practice of creating one or more dedicated border leaf pairs for external connectivity, but only if this is feasible. That way, you keep spines clean and tenant-agnostic, and it allows you to scale out border capacity independently.

As you can see, there are several options you can try, depending on the requirements and limitations of your specific scenario. However, in my opinion, if you need ROAS and tenant VRFs on spines, the fabric has already outgrown that design.

Let me make clear that I don’t have hands-on experience with a deployment similar to the one you are describing, but the above does align with how large-scale VXLAN EVPN fabrics are typically designed. As such, my responses should be taken as guidelines for furthering your troubleshooting and experimentation. I look forward to hearing your results!

I hope this has been helpful!

Laz