Hi,

I’m seeing some strange results when playing around with the MTU on a couple of directly connected routers:

R1----155.1.12.0/24----R2

R1 has the default mtu/ip mtu of 1500:

R1#sh int Gi0/1 | i MTU

MTU 1500 bytes, BW 1000000 Kbit/sec, DLY 10 usec,

R1#sh ip int Gi0/1 | i MTU

MTU is 1500 bytes

R2 has had its mtu/ip mtu changed to 1400:

R2#sh int Gi0/1 | i MTU

MTU 1400 bytes, BW 1000000 Kbit/sec, DLY 10 usec,

R2#sh ip int Gi0/1 | i MTU

MTU is 1400 bytes

So, I would expect the following ping from R1 to R2 to work, which it does:

R1#ping 155.1.12.2 size 1400

Type escape sequence to abort.

Sending 5, 1400-byte ICMP Echos to 155.1.12.2, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 1/1/2 ms

and the following ping to fail (because I have exceeded R2’s MTU) but it doesn’t:

R1#ping 155.1.12.2 size 1401

Type escape sequence to abort.

Sending 5, 1401-byte ICMP Echos to 155.1.12.2, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 2/2/3 ms

It is only when I increase the datagram size beyond 1410 that it starts to fail:

R1#ping 155.1.12.2 size 1410

Type escape sequence to abort.

Sending 5, 1410-byte ICMP Echos to 155.1.12.2, timeout is 2 seconds:

!!!!!

Success rate is 100 percent (5/5), round-trip min/avg/max = 2/2/3 ms

R1#ping 155.1.12.2 size 1411

Type escape sequence to abort.

Sending 5, 1411-byte ICMP Echos to 155.1.12.2, timeout is 2 seconds:

.....

Success rate is 0 percent (0/5)
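To put the four tests side by side, here is a small Python sketch. It is only bookkeeping and was not run on the routers: the `observed` table restates the ping results shown above, and the names `R2_MTU`, `observed`, `expected`, `surprises` and `largest_ok` are made up for illustration.

```python
# Ping results copied from the output above: datagram size -> did R2 reply?
R2_MTU = 1400  # MTU / IP MTU configured on R2 Gi0/1

observed = {
    1400: True,
    1401: True,
    1410: True,
    1411: False,
}

# What I expected: anything larger than R2's MTU should fail.
expected = {size: size <= R2_MTU for size in observed}

# Sizes where the observation disagrees with the expectation.
surprises = sorted(size for size in observed if observed[size] != expected[size])

# Largest size that still got replies, and how far it is above R2's MTU.
largest_ok = max(size for size, ok in observed.items() if ok)
excess = largest_ok - R2_MTU

print("unexpected successes:", surprises)
print("largest working size:", largest_ok, "bytes,", excess, "bytes over the MTU")
```

So the cut-off I actually observe sits 10 bytes above the configured MTU, not at it.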

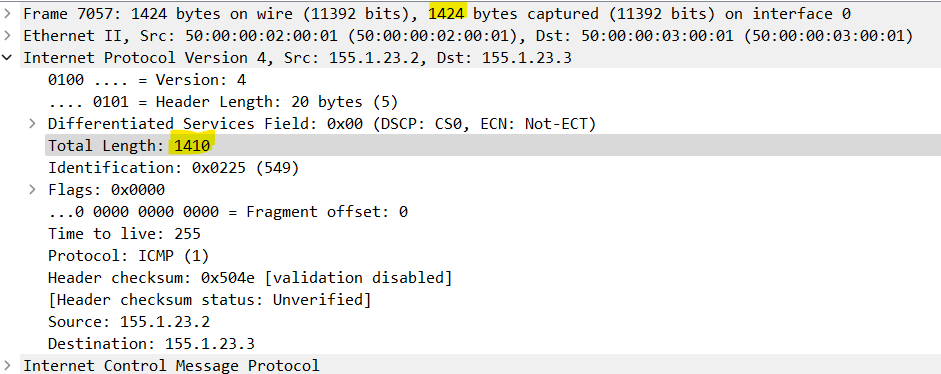

I did a packet capture using Wireshark and the frame size is indeed 1424 bytes.
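As a sanity check on that number, here is a minimal Python sketch of the arithmetic. It rests on assumptions I have not verified on these boxes: that the `size` given to the IOS ping is the full IP datagram length (IP header included), that the link is untagged Ethernet (14-byte header, no 802.1Q tag), that the capture does not include the 4-byte FCS, and that the captured frame was from the size 1410 ping. The function names are made up for illustration.

```python
ETHERNET_HEADER = 14  # dst MAC (6) + src MAC (6) + EtherType (2); assumes no 802.1Q tag
IPV4_HEADER = 20      # assumes no IP options
ICMP_HEADER = 8       # ICMP echo request header


def captured_frame_len(ip_datagram_size: int) -> int:
    """Frame length as a capture would show it, assuming the FCS is not captured."""
    return ip_datagram_size + ETHERNET_HEADER


def icmp_payload_len(ip_datagram_size: int) -> int:
    """ICMP data bytes left once the IP and ICMP headers are subtracted."""
    return ip_datagram_size - IPV4_HEADER - ICMP_HEADER


for size in (1400, 1410, 1411):
    print(size, captured_frame_len(size), icmp_payload_len(size))
```

Under those assumptions a 1410-byte datagram gives a 1424-byte frame, which matches the capture, so the Ethernet payload arriving at R2 really is 1410 bytes.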

So, why is an interface with an MTU of 1400 accepting frames with a payload of 1410?

Thanks,

Sam